Rubric Drift: Standardize Java vs Python Bars in Screening

A playbook for keeping your technical bar consistent across languages without slowing the funnel or inflaming bias risk.

If your rubric changes with the language, you do not have a bar. You have a committee argument waiting to happen.

Offer committee collapses under rubric drift

It is Thursday night, offer committee tomorrow. Two finalists are tied on team feedback. One is a Java engineer, one is a Python engineer. The Java candidate gets dinged for "verbosity" and "ceremony." The Python candidate gets praised for "clean code" and "speed." No one can explain, with evidence, whether both candidates actually met the same bar on correctness, performance, and testing.

By the end of this article, you will be able to implement a language-normalized rubric and calibration loop so Java and Python candidates are held to the same hiring standard, with defensible evidence and minimal funnel drag.

What is actually breaking in cross-language screens

Most teams think they have a rubric. In practice, they have a shared doc plus unwritten preferences that change with the language and reviewer. Java reviews often overweight structure, patterns, and type safety. Python reviews often overweight brevity, library fluency, and "pythonic" style. The same underlying capability gets scored differently. This is rubric drift: a process defect that shows up as committee churn, inconsistent pass-through rates by language, and weak audit defensibility.

Higher reviewer disagreement rates on "cleanliness" and "seniority" dimensions.

Language-specific pass rates that do not match your sourcing mix.

More "one more interview" decisions caused by low confidence in earlier scoring.
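To check the pass-rate symptom with data, a minimal sketch like the one below computes screen pass rates per language. The record shape and the sample values are hypothetical, made up for illustration; substitute an export from your ATS. Treat a gap as a prompt to inspect per-dimension scores, not as proof of drift, especially at small sample sizes.

```python
from collections import defaultdict

# Hypothetical screening records: (language, passed_screen). Not real data.
screens = [
    ("java", True), ("java", False), ("java", False), ("java", False),
    ("python", True), ("python", True), ("python", True), ("python", False),
]


def pass_rates_by_language(records):
    """Return {language: fraction of screens that passed}."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for language, passed in records:
        totals[language] += 1
        if passed:
            passes[language] += 1
    return {lang: passes[lang] / totals[lang] for lang in totals}


rates = pass_rates_by_language(screens)
gap = max(rates.values()) - min(rates.values())
print(rates)              # {'java': 0.25, 'python': 0.75}
print(f"gap={gap:.2f}")   # gap=0.50
```

Compare the result against your sourcing mix before drawing conclusions: a gap that tracks sourcing differences is a different problem from a gap that tracks reviewer preferences.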

Ownership, automation, and sources of truth

If you do not assign ownership, rubric debates turn into culture wars. Set a clear operating model before you tune dimensions.

Owner: Recruiting Ops owns the rubric template, calibration cadence, and scorecard hygiene. Hiring Managers own role-specific must-haves. Security/Compliance approves retention and access controls.

Automate repeatable controls (verification gates, baseline scoring capture, anomaly flags, Evidence Pack generation). Reserve humans for disagreements, borderlines, and appeals.

Sources of truth: ATS for stage progression and dispositions. IntegrityLens for verification events, interview and assessment artifacts, rubric scoring, and Evidence Packs.

Automated: Risk-Tiered Verification, assessment delivery, structured score entry requirements, idempotent webhook updates into the ATS.

Manual: adjudication when per-dimension scores diverge, suspected AI-assisted cheating review, candidate appeal handling using code playback.
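"Idempotent webhook updates" means a retried or duplicated delivery must not change the ATS a second time. The sketch below shows the general pattern with an in-memory stand-in. The class name, the `event_id`, `candidate_id`, and `stage` fields are assumptions for illustration, not the actual IntegrityLens or ATS payload; a production handler would persist the seen keys in a durable store.

```python
class AtsStageUpdater:
    """In-memory stand-in for an ATS client (hypothetical, for illustration)."""

    def __init__(self):
        self.seen_event_ids = set()    # idempotency keys already applied
        self.stage_by_candidate = {}   # candidate_id -> current stage

    def handle(self, event):
        """Apply a stage update once; replays of the same event_id are no-ops."""
        event_id = event["event_id"]
        if event_id in self.seen_event_ids:
            return "duplicate_ignored"
        self.stage_by_candidate[event["candidate_id"]] = event["stage"]
        self.seen_event_ids.add(event_id)
        return "applied"


updater = AtsStageUpdater()
event = {"event_id": "evt-001", "candidate_id": "cand-42", "stage": "technical_screen"}
first = updater.handle(event)
retry = updater.handle(event)   # simulated webhook redelivery
print(first, retry)             # applied duplicate_ignored
```

Keying on the event ID rather than on the candidate and stage lets a legitimate later move back to the same stage still go through.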

Why Analytics and Chiefs of Staff get pulled into this

You will be asked to explain screening outcomes when a miss-hire happens, when an offer declines due to perceived unfairness, or when Legal asks how consistent your bar is across candidates. Rubric drift creates avoidable cost through rework and additional interviews, slows time-to-decision via committee churn, and raises reputation risk when candidates feel judged on style instead of outcomes.

31% of hiring managers report interviewing someone who later turned out to be using a false identity (Checkr, 2025). Directionally, this implies integrity controls and defensible evidence matter in modern hiring. It does not prove your org has the same exposure or that rubric drift causes identity fraud.

1 in 6 applicants to remote roles showed signs of fraud in one real-world pipeline (Pindrop). Directionally, remote screening needs controls that scale and produce reviewable artifacts. It does not mean 1 in 6 of your applicants are fraudulent or that every anomaly is cheating.

The rubric model that survives Java vs Python

Standardize on observable behaviors, not syntax. Use 5 language-agnostic dimensions: correctness under spec, complexity tradeoffs, test strategy, debugging and iteration behavior, communication and reasoning. Then add a thin language guidance layer to prevent a "style tax." Style can influence the score only if it changes correctness, maintainability, or bug risk. Require per-dimension evidence notes tied to artifacts (test output, code playback timestamp, transcript excerpt). This is what makes your rubric defensible in disputes.

Java: explicit error handling and clear interfaces can reduce bug risk. Do not reward ceremony for its own sake.

Python: concision is fine if clarity remains high. Require explanation for dense one-liners that hide edge cases.
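The evidence requirement is easy to enforce mechanically at score entry. Here is a minimal sketch, assuming a scorecard is a dictionary keyed by dimension with `score`, `note`, and `artifact` fields. That shape and the function name are hypothetical; the five dimensions and the 1 to 4 scale come from the rubric described here.

```python
DIMENSIONS = ("correctness", "complexity", "testing", "debugging", "communication")
SCALE_MIN, SCALE_MAX = 1, 4


def scorecard_problems(scorecard):
    """Return a list of problems; an empty list means the card is complete."""
    problems = []
    for dim in DIMENSIONS:
        entry = scorecard.get(dim)
        if entry is None:
            problems.append(f"{dim}: missing score")
            continue
        score = entry.get("score")
        if not isinstance(score, int) or not SCALE_MIN <= score <= SCALE_MAX:
            problems.append(f"{dim}: score must be an integer from {SCALE_MIN} to {SCALE_MAX}")
        if not entry.get("note", "").strip():
            problems.append(f"{dim}: missing evidence note")
        if not entry.get("artifact", "").strip():
            problems.append(f"{dim}: missing artifact reference")
    return problems


# Hypothetical scorecard: the testing note has no artifact behind it.
card = {
    "correctness": {"score": 3, "note": "Passed hidden tests; explained empty input.", "artifact": "hidden_test_summary"},
    "complexity": {"score": 3, "note": "Justified the sort-based approach.", "artifact": "time_space_explanation"},
    "testing": {"score": 2, "note": "Basic tests only; no boundaries.", "artifact": ""},
    "debugging": {"score": 3, "note": "Formed a hypothesis, then checked output.", "artifact": "code_playback_timestamps"},
    "communication": {"score": 4, "note": "Stated constraints and alternatives.", "artifact": "ai_interview_transcript_excerpt"},
}
print(scorecard_problems(card))  # ['testing: missing artifact reference']
```

Rejecting the card at submission time is cheaper than reconstructing the reasoning after an escalation.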

Implementation steps you can run next week

Define anchors, not adjectives. Replace "strong" with observable anchors like "passes hidden tests and explains edge cases." Provide one Java and one Python example per anchor.

Normalize by role level. Separate junior, mid, and senior expectations so a Java solution does not get "promoted" for looking enterprise-grade.

Make per-dimension scoring mandatory. Overall scores hide drift and inflate false confidence.

Calibrate on variance, not averages. Every 2 weeks, have reviewers score two anonymized submissions independently and inspect per-dimension spread.

Add an adjudication and appeal workflow. Use code playback as the primary evidence to resolve disputes quickly.

Pair rubric consistency with integrity controls. Verify identity pre-screen and capture consistent artifacts so a proxy cannot tailor performance to a specific interviewer.

Limit evidence notes to one sentence per dimension and force an artifact link.

Auto-trigger adjudication only when spread is high (example: 2+ points on a 4-point scale).

Use async-first prompts so candidates answer at their best time and reviewers compare like-for-like responses.
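The calibration and adjudication steps above can be sketched as a per-dimension spread check. The function name and the reviewer data are hypothetical; the threshold of 2 points on a 4-point scale is the example trigger from the text. Note that both reviewers below have the same average score, which is exactly how an overall score hides drift.

```python
SPREAD_THRESHOLD = 2  # example trigger from the text: 2+ points on a 4-point scale


def dimensions_needing_adjudication(scores_by_reviewer, threshold=SPREAD_THRESHOLD):
    """Return {dimension: spread} where max minus min reviewer score >= threshold."""
    dimensions = set()
    for scores in scores_by_reviewer.values():
        dimensions.update(scores)
    flagged = {}
    for dim in sorted(dimensions):
        values = [s[dim] for s in scores_by_reviewer.values() if dim in s]
        spread = max(values) - min(values)
        if spread >= threshold:
            flagged[dim] = spread
    return flagged


# Hypothetical independent scores for one anonymized submission.
scores_by_reviewer = {
    "reviewer_a": {"correctness": 3, "complexity": 3, "testing": 2, "debugging": 3, "communication": 4},
    "reviewer_b": {"correctness": 3, "complexity": 2, "testing": 4, "debugging": 3, "communication": 3},
}

averages = {r: sum(s.values()) / len(s) for r, s in scores_by_reviewer.items()}
flagged = dimensions_needing_adjudication(scores_by_reviewer)
print(averages)  # {'reviewer_a': 3.0, 'reviewer_b': 3.0}
print(flagged)   # {'testing': 2}
```

Only the flagged dimension goes to adjudication; the rest of the scorecard stands as entered.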

Anti-patterns that make fraud worse

- Letting each interviewer pick their own question, then pretending scores are comparable.

- Treating rubric notes as optional, then trying to reconstruct decisions after an escalation.

- Over-indexing on code style as a proxy for quality, which proxies can mimic and strong candidates can reasonably differ on.

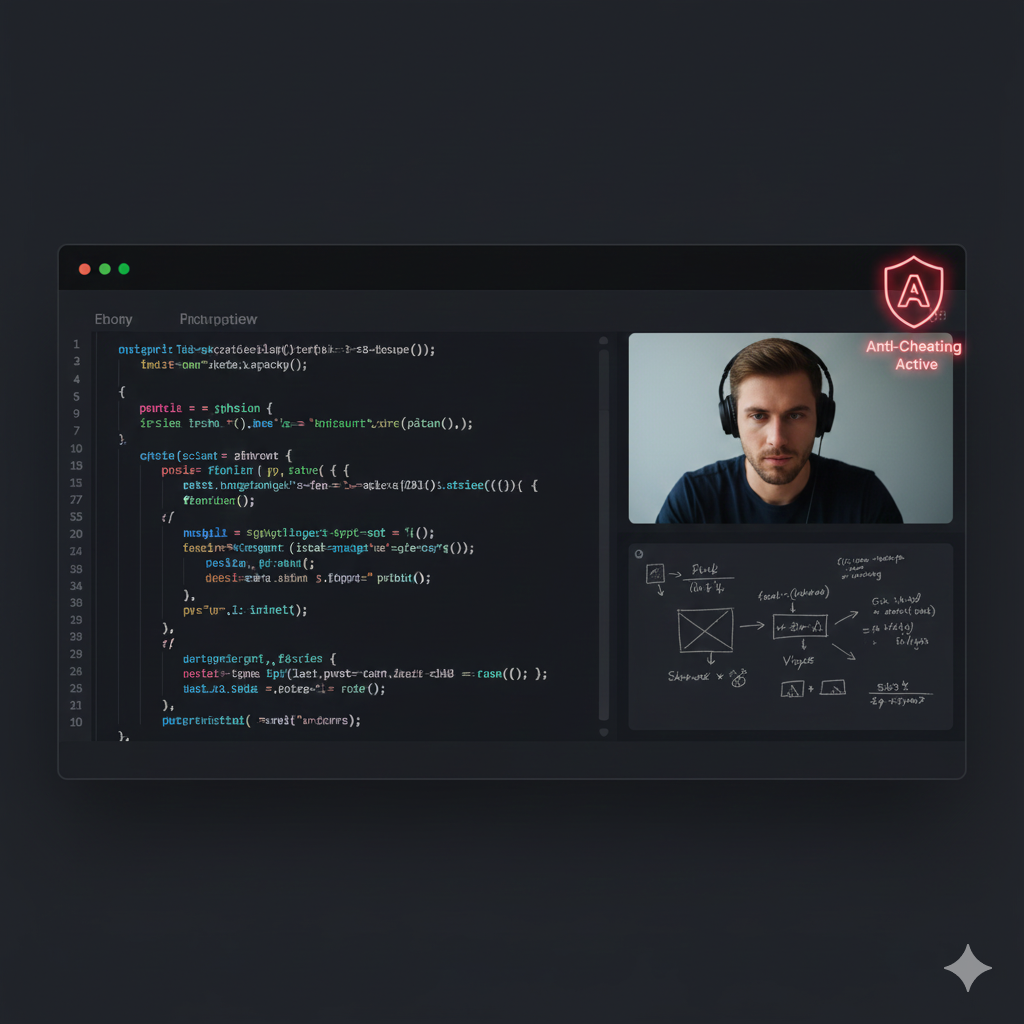

Where IntegrityLens fits

IntegrityLens AI is the first hiring pipeline that combines a full Applicant Tracking System with advanced biometric identity verification, fraud detection, AI screening interviews, and technical assessments. It helps teams standardize Java vs Python scoring by making evidence capture automatic and decisions reproducible across reviewers and time zones. TA leaders, recruiting ops, and CISOs use IntegrityLens to keep the lifecycle in one secure platform: Source candidates - Verify identity - Run interviews - Assess - Offer.

Key capabilities relevant here:

- Risk-Tiered Verification that completes before interviews (typical document + voice + face in 2-3 minutes, under three minutes in many flows).

- 24/7 async AI screening interviews for consistent prompts and comparable transcripts.

- Coding assessments across 40+ languages with code playback for dispute resolution.

- Evidence Packs that package prompts, artifacts, rubric scores, and verification event IDs for audits.

Artifact you can drop into your screening ops

Use the rubricPolicy YAML later in this article as a starting policy for a language-normalized rubric. It encodes dimensions, anchors, required evidence, and an adjudication trigger based on score spread.

Outcome proof you can reasonably expect

Before: committee time gets burned debating style, reviewers cannot reconcile Java vs Python scores, and escalations become opinion fights because the artifacts are thin. After: per-dimension anchors reduce variance, adjudication routes only true disagreements, and hiring managers see Evidence Packs that show how the candidate performed, not how the reviewer felt. Illustrative example (not a claim): over 2-3 calibration cycles, teams often target reducing adjudication volume by clarifying the highest-variance dimensions and tightening anchor language.

Key takeaways

- Rubric drift is an ops problem, not an interviewer personality problem - fix it with anchored criteria and calibration.

- Standardize on observable behaviors (correctness, complexity, testing, debugging, communication) and map language-specific signals to those behaviors.

- Require an Evidence Pack per candidate (prompt, code playback, test results, rubric scores, reviewer notes) to survive disputes and audits.

- Use async-first screening to reduce scheduling bias and make calibration easier across time zones.

- Automate the repeatable parts (identity verification, baseline scoring, anomaly flags) and reserve humans for exceptions and disagreements.

Encodes language-agnostic dimensions, scoring anchors, and required evidence artifacts.

Includes adjudication triggers and verification requirements so disputes are resolved with code playback, not opinions.

    rubricPolicy:
      policyId: "lang-normalized-v1"
      appliesToRoles:
        - family: "Software Engineering"
          levels: ["L2", "L3", "L4"]
      supportedLanguages: ["java", "python"]
      scoringScale: {min: 1, max: 4}
      dimensions:
        - id: "correctness"
          weight: 0.30
          anchors:
            "1": "Fails core requirements; cannot explain assumptions."
            "2": "Happy path works; misses common edge cases; unclear constraints."
            "3": "Meets spec; handles edge cases; explains assumptions."
            "4": "Exceeds spec; anticipates malformed input and constraints; validates approach."
          requiredEvidence:
            - "hidden_test_summary"
            - "candidate_edge_case_notes"
        - id: "complexity"
          weight: 0.20
          anchors:
            "1": "Chooses inefficient approach without awareness."
            "2": "Mentions complexity but cannot justify tradeoffs."
            "3": "Chooses appropriate complexity and can justify."
            "4": "Optimizes based on constraints; proposes alternatives and risks."
          requiredEvidence:
            - "time_space_explanation"
        - id: "testing"
          weight: 0.20
          anchors:
            "1": "No tests or edge cases; guesses."
            "2": "Basic tests; misses boundary conditions."
            "3": "Covers boundaries; uses tests to validate changes."
            "4": "Systematic strategy; targets failure modes; explains coverage gaps."
          requiredEvidence:
            - "candidate_test_cases"
        - id: "debugging"
          weight: 0.15
          anchors:
            "1": "Stuck; cannot isolate issues."
            "2": "Fixes symptoms; limited iteration reasoning."
            "3": "Iterates with hypotheses; uses outputs/logs effectively."
            "4": "Diagnoses quickly; explains root cause and prevention."
          requiredEvidence:
            - "code_playback_timestamps"
            - "iteration_notes"
        - id: "communication"
          weight: 0.15
          anchors:
            "1": "Cannot explain approach."
            "2": "Explains steps but not rationale."
            "3": "Explains rationale and tradeoffs clearly."
            "4": "Communicates constraints, risks, and alternatives crisply."
          requiredEvidence:
            - "ai_interview_transcript_excerpt"
      languageGuidance:
        java:
          styleNotes:
            - "Do not reward verbosity unless it reduces bug risk (eg explicit error handling)."
            - "Accept idiomatic use of collections/streams; score behavior, not framework preference."
        python:
          styleNotes:
            - "Do not reward concision unless correctness and clarity remain high."
            - "Accept idiomatic comprehensions; require explanation of tricky one-liners."
      controls:
        identityVerification:
          requiredBeforeStage: "technical_screen"
          mode: "risk-tiered"
        evidencePackRequired: true
      reviewRules:
        reviewersPerCandidate: 2
        requiresPerDimensionScores: true
        adjudication:
          trigger: "dimension_score_spread_gte_2"
          owner: "recruiting_ops"
      outputs:
        evidencePackIncludes:
          - "prompt_and_constraints"
          - "language_selected"
          - "final_code"
          - "code_playback"
          - "test_results"
          - "rubric_scores_and_notes"
          - "verification_event_ids"
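The policy lists a weight per dimension but does not say how the weights are combined. One plausible reading, shown here as an assumption rather than the policy's defined behavior, is a weighted mean on the 1 to 4 scale. The function name and sample scores are hypothetical. Report this number alongside the per-dimension scores, never instead of them.

```python
import math

# Weights copied from the policy's dimensions list.
WEIGHTS = {
    "correctness": 0.30,
    "complexity": 0.20,
    "testing": 0.20,
    "debugging": 0.15,
    "communication": 0.15,
}


def weighted_overall(scores, weights=WEIGHTS):
    """Weighted mean of per-dimension scores (assumed combination rule)."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("dimension weights must sum to 1.0")
    missing = sorted(set(weights) - set(scores))
    if missing:
        raise ValueError(f"missing per-dimension scores: {missing}")
    return sum(weights[dim] * scores[dim] for dim in weights)


# Hypothetical scores for one reviewer.
scores = {"correctness": 3, "complexity": 3, "testing": 2, "debugging": 3, "communication": 4}
overall = weighted_overall(scores)
print(f"{overall:.2f}")  # 2.95
```

The weight check catches a common editing mistake: adding or retuning a dimension without rebalancing the others.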

Outcome proof: What changes

Before

Technical screens were inconsistent across languages. Committees spent time debating style, and escalations were hard to resolve because per-dimension evidence was thin.

After

Rubrics were anchored on language-agnostic behaviors with a small language guidance layer. Disagreements routed to adjudication using code playback and Evidence Packs, reducing committee churn and improving decision defensibility.

Implementation checklist

- Pick 4-6 rubric dimensions that are language-agnostic and observable.

- Define anchors for each score level with concrete examples in Java and Python.

- Instrument reviewer variance: per-dimension spread, not just overall score.

- Set a calibration cadence and a dispute workflow with code playback as the primary artifact.

- Require Evidence Packs for every screen that reaches hiring manager review.
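The last checklist item can be gated automatically before a screen reaches hiring manager review. This is a minimal sketch, assuming an Evidence Pack is a dictionary keyed by the artifact names in the policy's evidencePackIncludes list. The function name and the sample pack contents are hypothetical.

```python
# Artifact keys taken from the policy's evidencePackIncludes list.
REQUIRED_ARTIFACTS = (
    "prompt_and_constraints",
    "language_selected",
    "final_code",
    "code_playback",
    "test_results",
    "rubric_scores_and_notes",
    "verification_event_ids",
)


def missing_artifacts(pack):
    """Return required artifact keys that are absent or empty in the pack."""
    return [key for key in REQUIRED_ARTIFACTS if not pack.get(key)]


# Hypothetical pack: playback was never attached and no verification ran.
pack = {
    "prompt_and_constraints": "prompt-v3",
    "language_selected": "java",
    "final_code": "submission-118.java",
    "test_results": "hidden tests: 9/10 passed",
    "rubric_scores_and_notes": "scorecard-118",
    "verification_event_ids": [],
}
gaps = missing_artifacts(pack)
print(gaps)  # ['code_playback', 'verification_event_ids']
```

An empty result means the pack is complete enough to forward; anything else goes back to the owner before review.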

Questions we hear from teams

- How do we handle candidates who choose different languages for the same task?

- Allow language choice, but keep prompts and constraints identical. Score only on the shared dimensions, and use the language guidance layer to prevent a style tax. Require the same Evidence Pack artifacts regardless of language.

- What should we do when reviewers disagree sharply?

- Trigger adjudication only on high-spread dimensions. The adjudicator re-scores the disputed dimensions using anchors and the candidate artifacts (tests, code playback, transcript excerpt). The goal is to fix rubric clarity, not "pick a winner".

- Will this slow down hiring?

- If implemented correctly, it removes downstream churn. Per-dimension scoring adds a small amount of structured work, but it prevents repeat interviews and committee rework by making early screens comparable and defensible.
