Time-to-Hire Gains: Instrumenting Speed Without Missing Fraud

A complete hiring lifecycle playbook for PeopleOps leaders who need faster hiring, lower risk, and reporting that survives audit questions.

If you cannot show where time was saved and what evidence supports risk decisions, you do not have speed. You have drift.

The board question you will get after a fraud scare

When a fraud incident hits the rumor mill, your cycle time metrics stop being a feel-good KPI and become a governance artifact. Leadership will ask two things in the same breath: "How fast can we hire?" and "How do we know this person is who they say they are?" You are managing speed, cost, risk, and reputation simultaneously. If your reporting cannot connect those dimensions to a single candidate timeline, you will end up debating anecdotes instead of operating the funnel.

Speed: headcount plans slip, teams burn out, and revenue targets take the hit

Cost: recruiter and interviewer time becomes an untracked queue

Risk: identity fraud, proxy interviews, and AI-assisted cheating can bypass weak handoffs

Reputation: candidates and hiring managers lose trust when processes look inconsistent

Why speed metrics alone create blind spots

Two approved data points help calibrate the risk conversation.

Checkr reports that 31% of hiring managers say they have interviewed a candidate who later turned out to be using a false identity. Directionally, this implies identity risk is not rare in day-to-day hiring operations. It does not prove your org's exact rate, nor does it identify which industries or role types are most affected without deeper segmentation. (https://checkr.com/resources/articles/hiring-hoax-manager-survey-2025)

Pindrop reports 1 in 6 applicants to remote roles showed signs of fraud in one real-world pipeline. Directionally, this suggests remote hiring increases attacker surface area and incentives. It does not mean 1 in 6 of your applicants are fraudulent, because detection criteria, role mix, and channel mix vary widely. (https://www.pindrop.com/article/why-your-hiring-process-now-cybersecurity-vulnerability/)

The operational takeaway: a time-to-hire initiative that does not measure fraud outcomes is incomplete. You may be accelerating the exact stage where your controls are weakest.

Lower stage-level wait time without higher rework

Stable or improved candidate experience metrics (drop-off, reschedules)

Stable or improved fraud catch rate with controlled false positives

Reduced reviewer fatigue through risk-tiered routing

Ownership, automation, and sources of truth

Before you instrument anything, decide who owns which levers and which system is authoritative. Most CHRO-level reporting failures happen because no one owns the "in between" moments: waiting for review, chasing artifacts, or reconciling timestamps across tools. A clean model is: Recruiting Ops owns funnel instrumentation and SLAs, Security owns risk policy and audit requirements, Hiring Managers own interview feedback turnaround. Automation should handle routine verification and scheduling, while humans review only exceptions with clear queues and deadlines.

Recruiting Ops (Accountable): event taxonomy, stage definitions, dashboard cadence, SLA enforcement

Security/Compliance (Consulted/Approver): risk tiers, escalation rules, retention and access controls

TA Leaders (Responsible): adoption across teams, interviewer behavior, calibration on pass/fail criteria

Hiring Managers (Responsible): feedback SLAs, decision velocity, interview panel hygiene

ATS: canonical candidate record, stage transitions, offer outcomes

Verification service: identity decision, evidence references, risk signals

Interview and assessment layer: interview completion, proctoring signals, assessment scores and integrity flags

Data warehouse: immutable event log for reporting and audit narratives

What to measure across the complete hiring lifecycle

Use the IntegrityLens lifecycle as your measurement backbone: Source candidates - Verify identity - Run interviews - Assess - Offer. Each stage needs inputs, outputs, and a timestamped event trail. Avoid vanity metrics that can be gamed, like "average time-to-hire" without distribution, segmentation, or rework counts. For CHRO reporting, you want three layers: (1) speed, (2) quality and risk, (3) operational load. All three should be segmentable by role family, location, source channel, and remote vs onsite.

Time in stage (median and p90): Applied to Verified, Verified to First Interview, Interview to Assessment Complete, Assessment to Offer

Queue time vs touch time: waiting for review vs actual review duration

Rework rate: number of stage reversals or reschedules per candidate

Fraud flag rate by stage: identity mismatch, liveness anomalies, suspicious interview behavior, assessment integrity flags

Manual review rate: percent routed to human review (proxy for reviewer load)

False positive rate (operational definition): flags overturned after review or successful appeal

Evidence completeness: percent of hires with a complete Evidence Pack attached to the final decision

Reviewer fatigue indicators: open review queue size, SLA breach rate, average age of queue items

Interviewer latency: time from interview completion to feedback submitted

Context switching: number of systems used per decision (aim to reduce)
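
To make the "median and p90" point concrete, here is a minimal Python sketch of how a distribution view can diverge from a single average. The durations are hypothetical, and the nearest-rank percentile is one of several reasonable definitions, so treat the numbers as illustrative rather than as a reference implementation.

```python
from statistics import median

def percentile(values, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not values:
        raise ValueError("no durations to summarize")
    ordered = sorted(values)
    # Integer ceiling of pct * n / 100 avoids floating point surprises.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]

def summarize_stage(minutes_in_stage):
    """Return the three numbers most often confused in cycle-time debates."""
    return {
        "median": median(minutes_in_stage),
        "p90": percentile(minutes_in_stage, 90),
        "mean": sum(minutes_in_stage) / len(minutes_in_stage),
    }

# Hypothetical minutes in one stage, before and after a process change.
before = [60, 70, 80, 90, 100, 110, 120, 130, 140, 150]
after = [30, 35, 40, 45, 50, 55, 60, 65, 600, 900]

summary_before = summarize_stage(before)
summary_after = summarize_stage(after)

print(summary_before)
print(summary_after)
```

In this made-up data the median roughly halves while the p90 and the mean both get worse. A report that shows only the median would call that a win.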

How to instrument it without breaking candidate experience

Instrumentation is easiest when you treat the pipeline as an event stream. Every meaningful action emits a normalized event with: candidate_id, stage, event_type, timestamp_utc, actor_type (system or human), decision (if any), and an evidence_reference (not raw biometrics). This is where teams lose data continuity: verification happens "over there," interviews happen "over there," and the ATS has a stage change with no explanation. Close that gap with a canonical event table and idempotent ingestion so retries do not create duplicate events.

Immutable append-only events (do not overwrite history)

Idempotent webhooks or ingestion keys to prevent duplicates

Evidence references instead of sensitive payloads (support Zero-Retention Biometrics where possible)

Consistent time basis (UTC) and explicit stage boundary definitions

candidate.created, stage.entered, stage.exited

verification.started, verification.completed, verification.escalated

interview.scheduled, interview.completed, feedback.submitted

assessment.started, assessment.completed, assessment.flagged

offer.created, offer.accepted, offer.declined
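
The event shape and the idempotent, append-only ingestion described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not a production design: the `HiringEvent` and `EventLog` names and the `idempotency_key` field are assumptions made for this example, and a real pipeline would enforce the same rules in the warehouse or ingestion layer.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

@dataclass(frozen=True)  # frozen: events are never edited after creation
class HiringEvent:
    idempotency_key: str  # hypothetical: one key per logical event, reused on retries
    candidate_id: str
    stage: str
    event_type: str
    timestamp_utc: datetime
    actor_type: str  # "system" or "human"
    decision: Optional[str] = None
    evidence_reference: Optional[str] = None  # pointer only, never raw biometrics

class EventLog:
    """Append-only log that ignores retries carrying an already-seen key."""

    def __init__(self):
        self._events = []
        self._seen_keys = set()

    def append(self, event):
        # Reject naive or non-UTC timestamps so every stage uses one time basis.
        if event.timestamp_utc.utcoffset() != timedelta(0):
            raise ValueError("timestamp_utc must be timezone-aware UTC")
        if event.idempotency_key in self._seen_keys:
            return False  # duplicate delivery, nothing stored
        self._seen_keys.add(event.idempotency_key)
        self._events.append(event)
        return True

    @property
    def events(self):
        return tuple(self._events)  # read-only view of history

log = EventLog()
event = HiringEvent(
    idempotency_key="verif-123-completed",
    candidate_id="cand-1",
    stage="verify",
    event_type="verification.completed",
    timestamp_utc=datetime(2025, 1, 6, 12, 0, tzinfo=timezone.utc),
    actor_type="system",
    decision="pass",
    evidence_reference="evidence/abc",
)
first = log.append(event)
retry = log.append(event)  # simulated webhook retry
print(first, retry, len(log.events))
```

The retry is acknowledged but not stored, so downstream counts are not inflated by delivery retries.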

A board-ready query: cycle time plus risk outcomes

The SQL pattern at the end of this post is one you can hand to Recruiting Ops or your analytics partner. It produces stage-level cycle times and risk outcomes, segmented for leadership. It assumes an append-only event table and separates automated verification decisions from manual reviews.

Run weekly, segment by role_family and remote_flag

Review medians plus p90 to expose long-tail delays

Pair fraud flags with manual review load to prevent reviewer burnout
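
For teams that want to sanity-check the warehouse numbers on a small export, the flag, manual review, and overturn rates can be sketched in Python. The field names follow the event schema used in this post. The sample events are hypothetical, and treating a human-actor flag event as "routed to manual review" is an assumption you should adjust to your own taxonomy.

```python
from collections import defaultdict

FLAG_EVENTS = {"verification.escalated", "assessment.flagged"}

def risk_rates(events):
    """Compute per-candidate rates so repeated events do not double count."""
    per_candidate = defaultdict(
        lambda: {"flagged": False, "reviewed": False, "overturned": False}
    )
    for e in events:
        outcome = per_candidate[e["candidate_id"]]
        if e["event_type"] in FLAG_EVENTS:
            outcome["flagged"] = True
            if e.get("actor_type") == "human":
                outcome["reviewed"] = True
        if e.get("review_outcome") == "overturned":
            outcome["overturned"] = True

    total = len(per_candidate)
    flagged = sum(o["flagged"] for o in per_candidate.values())
    reviewed = sum(o["reviewed"] for o in per_candidate.values())
    overturned = sum(o["overturned"] for o in per_candidate.values())
    return {
        "flag_rate": flagged / total if total else None,
        "manual_review_rate": reviewed / total if total else None,
        # Overturns are measured against reviews, not against all candidates.
        "overturn_rate_on_review": overturned / reviewed if reviewed else None,
    }

# Hypothetical events for four candidates.
sample_events = [
    {"candidate_id": "c1", "event_type": "stage.entered", "actor_type": "system"},
    {"candidate_id": "c2", "event_type": "verification.escalated", "actor_type": "system"},
    {"candidate_id": "c3", "event_type": "assessment.flagged", "actor_type": "human",
     "review_outcome": "overturned"},
    {"candidate_id": "c4", "event_type": "verification.escalated", "actor_type": "human"},
    {"candidate_id": "c4", "event_type": "assessment.flagged", "actor_type": "human"},
]
rates = risk_rates(sample_events)
print(rates)
```

Candidate c4 is flagged twice but counted once, which is the behavior you want when the same rates are reported per stage.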

Step-by-step rollout plan you can actually execute

This is a sequencing problem. If you try to perfect taxonomy, integrations, dashboards, and policy in one sprint, you will stall. Roll out in layers: baseline, instrument, govern, then optimize. Keep the first month boring: one canonical timeline, a small set of metrics, and one weekly ritual. Then add risk tiering and Evidence Pack coverage once leadership can read the dashboard without a translator.

Write definitions for each stage start and end event (no ambiguity)

Set SLAs for manual review queues (example: "review within 4 business hours" as an internal target, not a public promise)

Agree on appeal flow for candidates flagged in error

Create a single event table in your warehouse (append-only)

Ingest ATS stage changes and interview/assessment completion events

Ingest verification decisions with evidence_reference pointers (not raw biometric artifacts)

Add idempotency keys so webhook retries do not double count

Dashboard page 1: time-to-hire distribution (median, p90) by role family

Dashboard page 2: fraud flags, manual reviews, false positives (overturns) trend

Dashboard page 3: queue health (SLA breaches, aging items), interviewer feedback latency

Set a weekly 30-minute funnel review with Recruiting Ops + TA + Security
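
The queue health page can be prototyped before any dashboard tooling is chosen. The Python sketch below is a simplified illustration: the item fields `queued_at` and `reviewed_at` are hypothetical names, and the SLA is measured in wall-clock hours rather than business hours, which a real implementation would need to handle with a business calendar.

```python
from datetime import datetime, timedelta, timezone

SLA = timedelta(hours=4)  # assumption: wall-clock stand-in for "4 business hours"

def queue_health(items, now, sla=SLA):
    """Summarize open backlog and SLA breaches for a manual review queue."""
    open_ages = [now - i["queued_at"] for i in items if i.get("reviewed_at") is None]
    breaches = 0
    for i in items:
        # Open items are measured up to "now"; closed items up to review time.
        end = i.get("reviewed_at") or now
        if end - i["queued_at"] > sla:
            breaches += 1

    def hours(delta):
        return delta.total_seconds() / 3600

    return {
        "open_items": len(open_ages),
        "oldest_open_hours": hours(max(open_ages)) if open_ages else 0.0,
        "average_open_age_hours": (
            hours(sum(open_ages, timedelta(0))) / len(open_ages) if open_ages else 0.0
        ),
        "sla_breach_rate": breaches / len(items) if items else 0.0,
    }

def utc(hour, minute=0):
    """Helper for hypothetical timestamps on a single day."""
    return datetime(2025, 1, 6, hour, minute, tzinfo=timezone.utc)

items = [
    {"queued_at": utc(8), "reviewed_at": utc(9)},       # 1h, within SLA
    {"queued_at": utc(6), "reviewed_at": utc(11, 30)},  # 5.5h, breach
    {"queued_at": utc(10), "reviewed_at": None},        # open for 2h
    {"queued_at": utc(5), "reviewed_at": None},         # open for 7h, breach
]
health = queue_health(items, now=utc(12))
print(health)
```

Counting open items that have already passed the SLA as breaches keeps an aging backlog visible instead of hiding it until review.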

Anti-patterns that make fraud worse

Measuring time-to-hire only from requisition open to offer accepted, which hides verification delays and creates incentives to bypass controls.

Treating fraud tooling as a side portal with no ATS stage impact, so recruiters keep moving candidates forward without seeing risk signals.

Routing every flag to the same manual reviewer pool with no risk tiering, which guarantees reviewer fatigue and inconsistent decisions.
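
As a contrast to the last anti-pattern, risk-tiered routing can be sketched as a simple lookup. The tier names and queue names below are hypothetical and exist only for illustration. Your Security team's policy defines the real tiers and what each one requires.

```python
# Hypothetical tiers and queues, not a recommended policy.
ROUTES = {
    "low": "auto_clear",          # logged, no human review
    "medium": "standard_review",  # general reviewer pool
    "high": "priority_review",    # smaller pool with Security on call
}

def route_flag(flag):
    """Return the queue name for a flag dict that may carry a 'risk_tier'."""
    # Unknown or missing tiers fail closed into the most careful queue.
    return ROUTES.get(flag.get("risk_tier"), "priority_review")

def queue_loads(flags):
    """Count how many flags land in each queue."""
    loads = {name: 0 for name in ROUTES.values()}
    for flag in flags:
        loads[route_flag(flag)] += 1
    return loads

flags = [
    {"candidate_id": "c1", "risk_tier": "low"},
    {"candidate_id": "c2", "risk_tier": "low"},
    {"candidate_id": "c3", "risk_tier": "medium"},
    {"candidate_id": "c4", "risk_tier": "high"},
    {"candidate_id": "c5"},  # tier missing
]
loads = queue_loads(flags)
print(loads)
```

Tracking the load per queue is what lets you see whether tiering is actually reducing reviewer fatigue or just moving it.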

How to report it to leadership without triggering defensiveness

Leadership reporting should answer: what changed, where time was saved, what risk was reduced, and what tradeoffs were managed. If your deck sounds like a vendor brochure, it will be challenged. If it reads like an incident postmortem with controls, it will be trusted. Use a single page narrative backed by a consistent dashboard: (1) speed, (2) integrity, (3) experience. Make it easy to see that you are not "buying speed" by tolerating more risk.

Speed: stage-level median and p90 movement, with top 2 bottlenecks

Integrity: flags, reviews, overturns (appeals), and the top 2 root causes

Experience: drop-off by stage and reschedule rate (spot friction early)

Governance: Evidence Pack coverage and any policy changes approved by Legal/Security

Do not celebrate lower cycle time if p90 got worse (long-tail pain drives reputation damage)

Do not show fraud numbers without defining detection criteria and review outcomes

Do not ask for more headcount without showing queue health and automation coverage

Where IntegrityLens fits

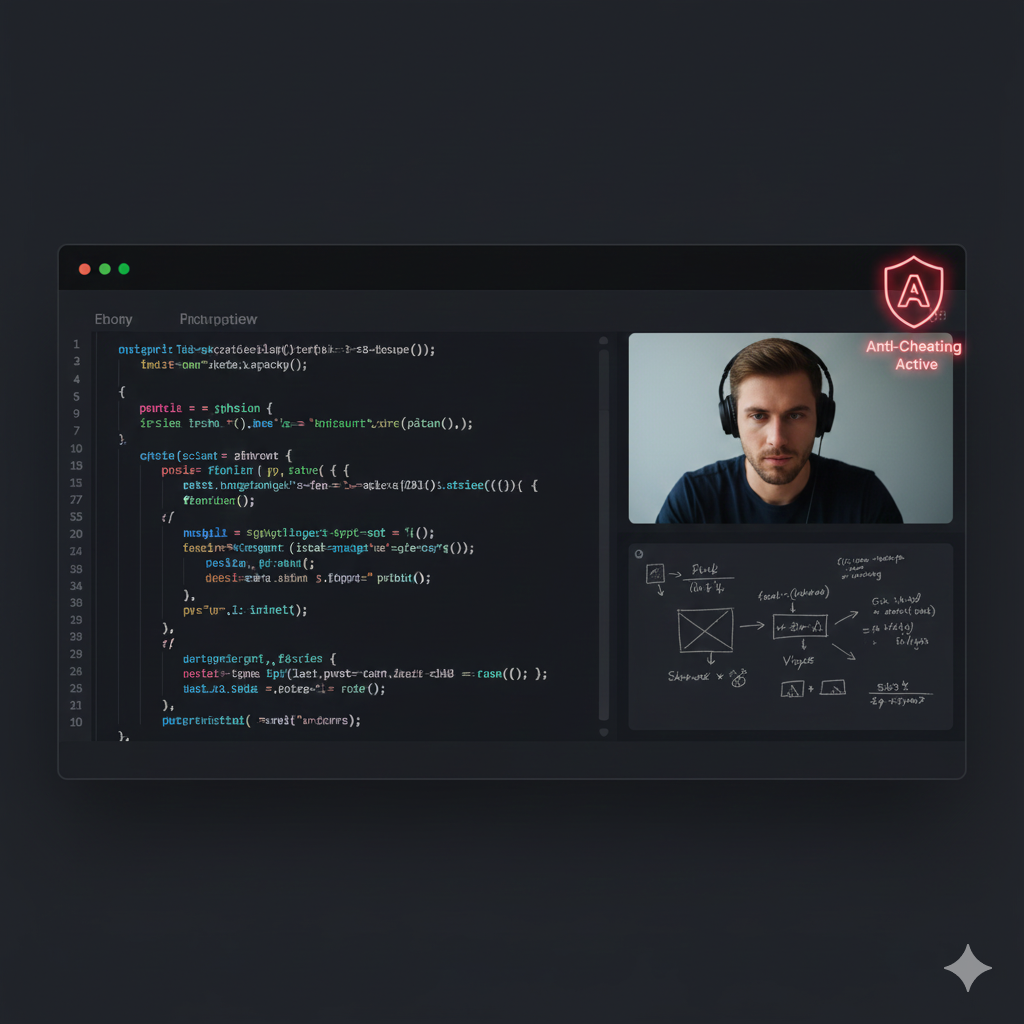

IntegrityLens AI is the first hiring pipeline that combines a full Applicant Tracking System with advanced biometric identity verification, AI screening, and technical assessments. For CHROs, this matters because instrumentation and reporting get easier when the funnel is one system of record, not five disconnected tools. IntegrityLens supports the complete lifecycle: Source candidates - Verify identity - Run interviews - Assess - Offer, with Risk-Tiered Verification and audit-ready Evidence Packs. TA leaders and recruiting ops teams use it to reduce funnel leakage and reviewer fatigue. CISOs use it to enforce consistent identity controls and keep decisions defensible.

ATS workflow with stage-level audit trails

Biometric identity verification (typical end-to-end verification time 2-3 minutes) with encryption baselines (256-bit AES)

24/7 AI screening interviews for consistent early-stage coverage

Technical assessments across 40+ programming languages

Unified reporting so risk signals follow the candidate through to offer

Sources

- Checkr, Hiring Hoax (Manager Survey, 2025): https://checkr.com/resources/articles/hiring-hoax-manager-survey-2025

- Pindrop, hiring process as a cybersecurity vulnerability: https://www.pindrop.com/article/why-your-hiring-process-now-cybersecurity-vulnerability/

Key takeaways

- Treat time-to-hire as a chain of stage-level cycle times, not a single number that hides bottlenecks and risk handoffs.

- Instrument event-level telemetry (who, what, when, evidence) so speed improvements are defensible to Legal, Security, and the board.

- Report speed and fraud together: faster is only a win if false positives, candidate drop-off, and reviewer fatigue stay controlled.

- Create explicit ownership and SLAs for manual review so "waiting for someone to look" stops being your biggest hidden queue.

- Use Evidence Packs and risk tiers to explain decisions without over-collecting sensitive data.

This query calculates stage-level cycle time from event logs, plus verification and integrity outcomes. It is designed to support CHRO-level reporting: median and p90 cycle times, flag rates, manual review load, and overturns (appeals).

Assumes an append-only table `hiring_events` with: candidate_id, req_id, role_family, remote_flag, stage, event_type, timestamp_utc, actor_type, decision, risk_tier, evidence_ref, review_outcome.

```sql
-- Stage entry events only, so each duration runs from one stage entry to the next.
WITH stage_events AS (
  SELECT
    candidate_id,
    req_id,
    role_family,
    remote_flag,
    stage,
    timestamp_utc,
    LAG(timestamp_utc) OVER (
      PARTITION BY candidate_id
      ORDER BY timestamp_utc
    ) AS prev_ts,
    LAG(stage) OVER (
      PARTITION BY candidate_id
      ORDER BY timestamp_utc
    ) AS prev_stage
  FROM hiring_events
  WHERE event_type = 'stage.entered'
),
-- Time in the previous stage = entry into the next stage minus entry into the previous one.
-- The final stage a candidate reaches has no next entry and is not measured here.
stage_durations AS (
  SELECT
    candidate_id,
    req_id,
    role_family,
    remote_flag,
    prev_stage AS stage_name,
    TIMESTAMP_DIFF(timestamp_utc, prev_ts, MINUTE) AS minutes_in_stage
  FROM stage_events
  WHERE prev_ts IS NOT NULL
    AND prev_stage IS NOT NULL
),
-- One row per candidate with verification and integrity outcomes.
risk_outcomes AS (
  SELECT
    candidate_id,
    MAX(CASE WHEN event_type = 'verification.completed' THEN 1 ELSE 0 END) AS verified,
    MAX(CASE WHEN event_type IN ('verification.escalated','assessment.flagged') THEN 1 ELSE 0 END) AS any_flag,
    MAX(CASE WHEN actor_type = 'human' AND event_type IN ('verification.escalated','assessment.flagged') THEN 1 ELSE 0 END) AS manual_review_routed,
    MAX(CASE WHEN review_outcome = 'overturned' THEN 1 ELSE 0 END) AS flag_overturned,
    MAX(risk_tier) AS max_risk_tier
  FROM hiring_events
  GROUP BY candidate_id
)
SELECT
  sd.role_family,
  sd.remote_flag,
  sd.stage_name,
  APPROX_QUANTILES(sd.minutes_in_stage, 100)[OFFSET(50)] AS median_minutes_in_stage,
  APPROX_QUANTILES(sd.minutes_in_stage, 100)[OFFSET(90)] AS p90_minutes_in_stage,
  COUNT(DISTINCT sd.candidate_id) AS candidates,
  -- Count distinct candidates so a re-entered stage does not double count outcomes.
  SAFE_DIVIDE(
    COUNT(DISTINCT IF(ro.any_flag = 1, sd.candidate_id, NULL)),
    COUNT(DISTINCT sd.candidate_id)
  ) AS flag_rate,
  SAFE_DIVIDE(
    COUNT(DISTINCT IF(ro.manual_review_routed = 1, sd.candidate_id, NULL)),
    COUNT(DISTINCT sd.candidate_id)
  ) AS manual_review_rate,
  SAFE_DIVIDE(
    COUNT(DISTINCT IF(ro.flag_overturned = 1, sd.candidate_id, NULL)),
    COUNT(DISTINCT IF(ro.manual_review_routed = 1, sd.candidate_id, NULL))
  ) AS overturn_rate_on_review
FROM stage_durations sd
LEFT JOIN risk_outcomes ro
  ON ro.candidate_id = sd.candidate_id
-- Drop negative gaps and stages longer than 30 days (43,200 minutes).
WHERE sd.minutes_in_stage BETWEEN 0 AND 43200
GROUP BY 1, 2, 3
ORDER BY role_family, remote_flag, stage_name;
```

Outcome proof: What changes

Before

Cycle time debates were based on blended averages and screenshots from multiple tools. Fraud signals were reviewed inconsistently and often after interviews, creating rework and awkward candidate callbacks. Manual review queues had no SLA and quietly became the largest bottleneck.

After

Leadership received a single stage-level view showing median and p90 time-in-stage alongside flag, review, and overturn trends. Risk-tier routing limited human review to exceptions, and Evidence Packs made decisions easier to defend during spot audits and candidate appeals.

Implementation checklist

- Define stage boundaries and a single time basis (UTC) for all pipeline events

- Create a canonical event taxonomy for source, verify, interview, assess, offer

- Add a risk tier and decision outcome to every candidate record

- Separate automated decisions from manual reviews with SLAs and queue metrics

- Publish a weekly leadership dashboard and a monthly audit-ready narrative

Questions we hear from teams

- What is the minimum I need to report to the CEO each month?

  Stage-level median and p90 time-to-hire, top bottleneck stage, fraud flag and manual review rates, overturn rate (fairness signal), and Evidence Pack coverage for hires.

- How do I avoid making candidates feel like suspects?

  Use risk-tiered verification and focus manual review on exceptions. Communicate that identity verification protects both the candidate and the company, and provide a clear appeal path for edge cases.

- What if Security wants stricter controls that slow hiring?

  Make the tradeoff explicit with data: show where queue time accumulates, separate automation from human review delays, and propose controls that increase signal quality without increasing friction (for example, earlier verification with evidence references and clear SLAs).
