Verification Outcome Messages That Do Not Trigger Candidate Dropoff

Transparent, non-alarming verification outcome messaging is a control. Treat it like identity gating: clear states, explicit owners, immutable logs, and recovery paths that keep time-to-offer moving.

Outcome copy is not UX decoration. It is the candidate-facing surface area of your identity gate, and it must be logged like any other control.

A verification result comes back at 6:12 PM. Your team responds at 9:40 AM. What broke overnight?

Treat verification outcome messaging as a production incident, not a courtesy note. When a candidate gets a vague "we could not verify you" message, you create three risks at once: funnel leakage, shadow workflows, and an audit gap.

Operationally, the failure shows up as time-to-offer slipping because work stops at the identity gate. Recruiters then bypass controls to hit hiring manager SLAs, typically by pushing candidates into interviews anyway or collecting identity documents over email. That is a compliance gap disguised as urgency.

Legally, the exposure is simple: if Legal asked you to prove who approved this candidate, could you retrieve it? A decision without evidence is not audit-ready. Messaging that does not map to a logged state creates the worst kind of ambiguity: you cannot show what the candidate was told, when they were told, or what recovery path you offered.

Fraud risk raises the stakes. Checkr reports that 31 percent of hiring managers say they have interviewed a candidate who later turned out to be using a false identity. If your outcome copy triggers abandonment among legitimate candidates while giving attackers a roadmap, you pay twice: conversion loss plus a higher fraud success rate.

Costs compound when a mis-hire slips through. SHRM estimates that replacement cost can range from 50 to 200 percent of annual salary, depending on the role. The most expensive failures often start with one unlogged, improvised message that pushed identity gating out of sequence.

Why do legacy tools fail at outcome communication? Because they do not treat it as a controlled state machine.

Most ATS, background check flows, and coding challenge tools treat verification outcomes as notifications, not as workflow states. The market failed to solve this because the tools are optimized for their own step, not for an end-to-end, audit-defensible pipeline. Here is what breaks in practice:

- Sequential checks slow everything down because each vendor waits for the prior step to finish. Candidates experience dead time, not progress.
- No unified immutable event log. You get emails, PDFs, and screenshots instead of a tamper-resistant timeline tied to the candidate record.
- No evidence packs. Reviewers cannot see the full chain: what signal fired, what message was sent, who reviewed, and what override occurred.
- No review-bound SLAs. Manual queues become unbounded, and delays cluster at moments where identity is unverified.
- No standardized rubric storage for exceptions. Hiring managers approve "just this once" via chat, and that becomes an integrity liability.

In short: shadow workflows are integrity liabilities. If it is not logged, it is not defensible.

Who owns verification outcomes, reviews, and messaging? Make it explicit or it will default to whoever is online.

Recommendation: define a simple RACI that matches how incidents get resolved in the real world.

Ownership model:

- Recruiting Ops owns the workflow design, candidate-facing copy, queue operations, and SLA enforcement. They are accountable for time-to-event and completion rate.
- Security owns identity policy thresholds, access control, exception criteria, and audit policy. They are accountable for fraud exposure and evidence sufficiency.
- Hiring Manager owns rubric discipline and downstream decisions, but does not own identity exceptions. They are accountable for evidence-based scoring once identity is gated.

Automation versus manual review:

- Automated: outcome classification, candidate messaging dispatch, ATS write-back, evidence pack creation, queue routing, and access expiration by default.
- Manual: exception review for mismatches, document unreadable cases, accessibility fallbacks, and suspected deepfake or proxy signals.

Sources of truth:

- ATS is the system of record for candidate status and communications history.
- Verification service is the system of record for raw verification signals and reason codes.
- Evidence pack is the audit artifact that binds both together with timestamps, reviewer notes, and approvals.

To make ownership enforceable, define:

- A named queue owner per region and business day.
- A review clock per state (for example, "manual review started" and "manual review decided").
- An override policy that requires a reason code and approver identity.
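The override rule described above can be checked before an exception is written anywhere. The following is a minimal Python sketch, assuming overrides are captured as structured records rather than chat messages; `OverrideRequest`, `validate_override`, and the reason codes are hypothetical names, and the three required fields mirror the `override_policy` block in the policy file later in this post.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical reason codes; the real list belongs to Security's exception criteria.
ALLOWED_REASON_CODES = {"DOC_UNREADABLE", "ACCESSIBILITY_FALLBACK", "DEVICE_FAILURE"}


@dataclass(frozen=True)
class OverrideRequest:
    """Hypothetical structured override record; field names are illustrative."""
    candidate_id: str
    approver_id: Optional[str]
    reason_code: Optional[str]
    created_at: datetime
    expires_at: Optional[datetime]


def validate_override(req: OverrideRequest) -> list:
    """Return the problems found; an empty list means the override can be logged."""
    problems = []
    if not req.approver_id:
        problems.append("missing approver_id")
    if req.reason_code not in ALLOWED_REASON_CODES:
        problems.append("missing or unknown reason_code")
    if req.expires_at is None:
        problems.append("missing time-bound expiration")
    elif req.expires_at <= req.created_at:
        problems.append("expiration must be after creation")
    return problems


now = datetime(2024, 5, 1, 9, 0)
logged = OverrideRequest("cand-1", "security-lead", "DOC_UNREADABLE", now, now + timedelta(hours=24))
chat_approval = OverrideRequest("cand-2", None, None, now, None)
print(validate_override(logged))         # []
print(validate_override(chat_approval))  # three problems: approver, reason code, expiration
```

Returning every problem rather than raising on the first one lets a reviewer see everything that is missing in one pass.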

What is the modern operating model for verification outcomes? Instrument the messages like control-plane events.

Recommendation: implement verification outcomes as a state machine with event-based triggers and standardized recovery paths.

Instrumented workflow requirements:

- Identity verification before access. The candidate does not enter interviews or assessments until identity is gated, or an explicit exception is logged.
- Event-based triggers. Each outcome emits a timestamped event that routes to the correct queue and sends the correct copy template.
- Automated evidence capture. Every message sent is recorded with template ID, channel, timestamp, and delivery status.
- Analytics dashboards. Track time-to-event by state, abandonment by step, and manual review SLA breaches.
- Standardized rubrics. Exception decisions require structured notes and a reason code, not free text in chat.

Outcome copy becomes part of the control. It should do four things every time: name the state, explain the next step, give a review clock, and provide a fallback path that covers accessibility needs and device failures.
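To make "state machine with event-based triggers" concrete, here is a minimal Python sketch. The five outcome states and template IDs come from the runbook and policy file in this post; the `pending` start state, the transition table, and the `CandidateVerification` class are assumptions for illustration, not a product API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Template IDs match the policy file in this post; the transition table is an assumption.
TEMPLATES = {
    "verified": "VERIFIED_V1",
    "action_required_retry": "RETRY_V1",
    "manual_review": "MANUAL_REVIEW_V1",
    "fallback_required": "FALLBACK_V1",
    "not_proceeding": "NOT_PROCEEDING_V1",
}

ALLOWED_TRANSITIONS = {
    "pending": set(TEMPLATES),  # any outcome can follow the first check
    "action_required_retry": {"verified", "action_required_retry", "manual_review", "fallback_required"},
    "manual_review": {"verified", "fallback_required", "not_proceeding"},
    "fallback_required": {"verified", "manual_review", "not_proceeding"},
    "verified": set(),        # terminal
    "not_proceeding": set(),  # terminal
}


@dataclass
class CandidateVerification:
    candidate_id: str
    state: str = "pending"
    events: list = field(default_factory=list)

    def transition(self, new_state: str, at: datetime) -> dict:
        """Move to new_state and emit one timestamped event naming the copy template."""
        if new_state not in ALLOWED_TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        event = {
            "candidate_id": self.candidate_id,
            "from_state": self.state,
            "to_state": new_state,
            "template_id": TEMPLATES[new_state],
            "timestamp": at.isoformat(),
        }
        self.state = new_state
        self.events.append(event)  # a real system would write this back to the ATS and route it
        return event


cv = CandidateVerification("cand-42")
cv.transition("manual_review", datetime(2024, 5, 3, 18, 12, tzinfo=timezone.utc))
cv.transition("verified", datetime(2024, 5, 6, 10, 5, tzinfo=timezone.utc))
print(cv.state, [e["template_id"] for e in cv.events])
# verified ['MANUAL_REVIEW_V1', 'VERIFIED_V1']
```

Rejecting illegal transitions (for example, reopening a `verified` candidate into review) keeps the message history and the logged state from drifting apart.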

- Be specific about the process, not the signal. Say "We need a manual review" rather than "Your face did not match."
- Always provide a recovery path that is logged (retry, alternate method, or manual review).
- Do not disclose fraud heuristics or thresholds. Avoid teaching attackers how to tune attempts.
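One way to honor these rules is to keep two vocabularies: detailed internal reason codes for routing and the evidence pack, and a small set of neutral candidate templates. A minimal sketch, assuming hypothetical reason codes; the copy strings are the example templates from this post.

```python
# Hypothetical internal reason codes. Several codes, including fraud-related ones,
# deliberately share one neutral template so the copy reveals no signal.
REASON_TO_TEMPLATE = {
    "CAMERA_ERROR": "RETRY_V1",
    "DOCUMENT_GLARE": "RETRY_V1",
    "FACE_MATCH_INCONCLUSIVE": "MANUAL_REVIEW_V1",
    "SUSPECTED_PROXY": "MANUAL_REVIEW_V1",
    "SUSPECTED_DEEPFAKE": "MANUAL_REVIEW_V1",
    "DEVICE_UNSUPPORTED": "FALLBACK_V1",
}

# Candidate-facing copy taken from the example templates in this post.
CANDIDATE_COPY = {
    "RETRY_V1": ("We could not complete verification due to a technical issue. "
                 "Please retry using this link. It typically takes 2-3 minutes."),
    "MANUAL_REVIEW_V1": ("Your verification is in manual review. You do not need to take action. "
                         "We will update you within 1 business day."),
    "FALLBACK_V1": ("If you cannot complete this on your device, choose an alternate option. "
                    "We support assisted review."),
}


def candidate_message(reason_code: str) -> tuple:
    """Return (template_id, copy). Unknown codes fall back to manual review, never to signal-specific text."""
    template_id = REASON_TO_TEMPLATE.get(reason_code, "MANUAL_REVIEW_V1")
    return template_id, CANDIDATE_COPY[template_id]


# A suspected proxy and an inconclusive face match look identical to the candidate.
print(candidate_message("SUSPECTED_PROXY") == candidate_message("FACE_MATCH_INCONCLUSIVE"))  # True
```

The internal reason code still goes into the evidence pack; only the candidate-facing text is collapsed. Defaulting unknown codes to manual review means a new detection signal cannot leak through a new message.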

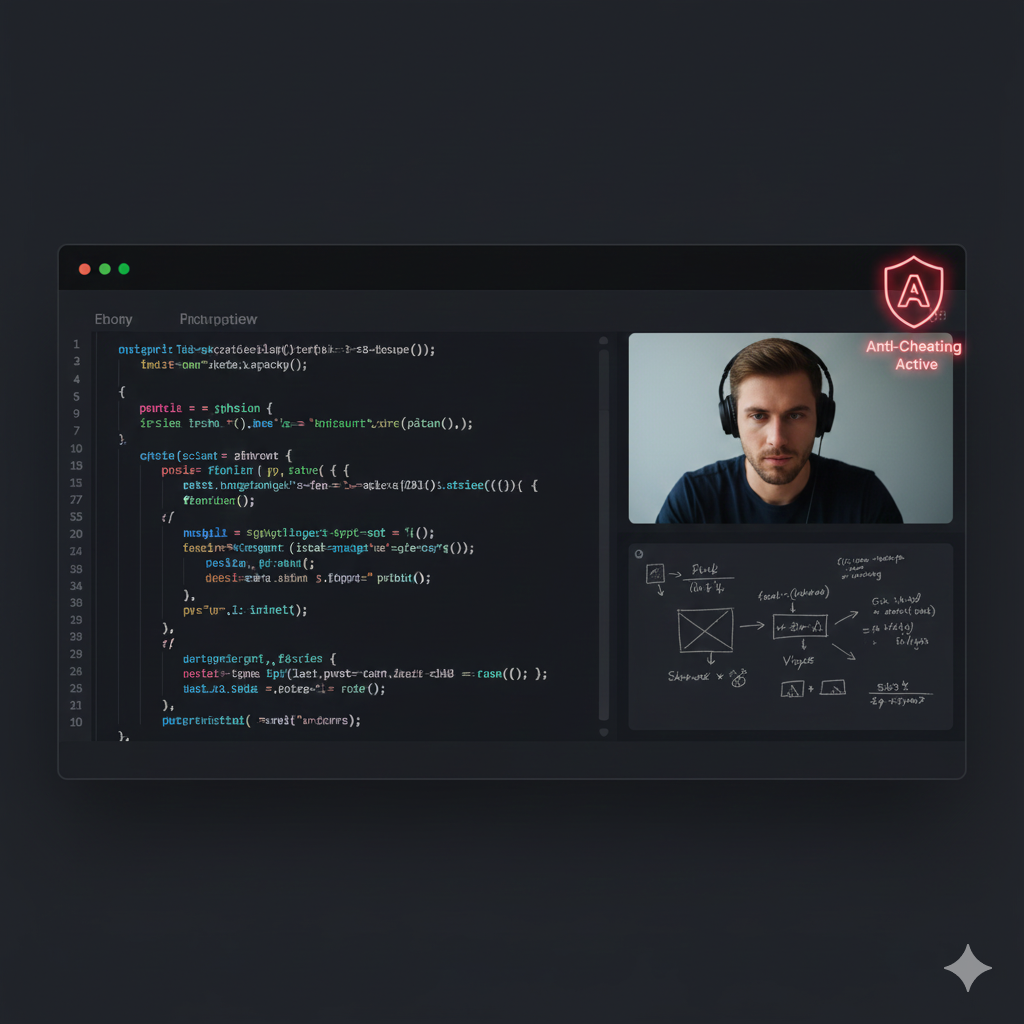

Where IntegrityLens fits in this workflow

IntegrityLens AI acts as the control layer between Recruiting Ops speed requirements and Security audit requirements, while keeping the ATS as the single source of truth.

- Runs biometric identity verification (liveness, face match, document auth) as an identity gate before interview or assessment access.
- Writes immutable, timestamped verification events back into the ATS so status and messaging are audit-reconstructable.
- Produces evidence packs with reviewer notes and decision provenance, so exceptions are defensible under Legal review.
- Supports fraud prevention signals (deepfake and proxy interview detection) without exposing sensitive heuristics in candidate copy.
- Uses zero-retention biometrics architecture for operational control over sensitive data handling.

What makes fraud worse? Three anti-patterns

- Using ambiguous outcomes like "failed" or "flagged" without a logged reason code and recovery path. This increases abandonment and invites shadow bypasses.
- Allowing hiring managers to approve identity exceptions over chat or email. Manual review without evidence creates audit liabilities.
- Letting candidates proceed to interviews while "verification is pending" with no access expiration by default. You are granting privileged access before identity is gated.
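The third anti-pattern is easiest to prevent with deny-by-default access. A minimal sketch, assuming a hypothetical `AccessGrant` record in which only a logged override can set an exception expiry; `can_access_interview` is likewise a hypothetical helper.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class AccessGrant:
    """Hypothetical access record for a candidate's interview or assessment."""
    candidate_id: str
    verification_state: str
    exception_expires_at: Optional[datetime] = None  # set only by a logged override


def can_access_interview(grant: AccessGrant, now: datetime) -> bool:
    """Deny by default: only a verified identity or an unexpired, logged exception grants access."""
    if grant.verification_state == "verified":
        return True
    return grant.exception_expires_at is not None and now < grant.exception_expires_at


now = datetime(2024, 5, 1, 9, 0)
print(can_access_interview(AccessGrant("cand-1", "pending"), now))  # False
exception = AccessGrant("cand-2", "pending", now + timedelta(hours=4))
print(can_access_interview(exception, now))                       # True
print(can_access_interview(exception, now + timedelta(hours=5)))  # False: exception expired
```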

Implementation runbook: outcome states, SLAs, owners, and what gets logged

Define status taxonomy (same day)

- Owner: Recruiting Ops (workflow), Security (policy), Legal (language review)
- SLA: 4 business hours to finalize templates
- Evidence: template IDs, version, approval timestamps, and policy owner

Outcome = Verified (pass)

- Owner: System automated, Recruiting Ops monitors
- SLA: immediate message dispatch after verification event
- Candidate copy principle: "You are cleared to proceed" plus what happens next and when
- Logged: verification_completed, status=verified, timestamp, message_sent(template=VERIFIED_V1)

Outcome = Action required (retry)

- Owner: System automated, Recruiting Ops monitors completion
- SLA: dispatch within 1 minute; retry window 24 hours
- Candidate copy principle: blame the process, not the person. Offer a retry link and support
- Logged: verification_retry_requested, retry_count, delivery status, device metadata (non-sensitive)

Outcome = Manual review (inconclusive)

- Owner: Security review queue, Recruiting Ops queue ops
- SLA: review start within 4 business hours; decision within 1 business day
- Candidate copy principle: set the clock. "A specialist is reviewing" and provide what not to do (do not resubmit repeatedly)
- Logged: manual_review_queued, reviewer_assigned, review_started, review_decided, reviewer notes
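Review clocks like "start within 4 business hours" need a shared definition of business time, or every region will compute breaches differently. A minimal sketch, assuming a 9:00 to 17:00, Monday to Friday schedule in the queue owner's local time, no holiday calendar, and "1 business day" read as 8 business hours; `add_business_hours` is a hypothetical helper.

```python
from datetime import datetime, time, timedelta

# Assumed business hours in the queue owner's local time; holidays are not modeled.
OPEN, CLOSE = time(9, 0), time(17, 0)


def _next_open(current: datetime) -> datetime:
    """Opening time on the calendar day after `current`."""
    return datetime.combine(current.date() + timedelta(days=1), OPEN, tzinfo=current.tzinfo)


def add_business_hours(start: datetime, hours: float) -> datetime:
    """Return the deadline that lies `hours` business hours after `start`."""
    remaining = timedelta(hours=hours)
    current = start
    while True:
        day_open = datetime.combine(current.date(), OPEN, tzinfo=current.tzinfo)
        day_close = datetime.combine(current.date(), CLOSE, tzinfo=current.tzinfo)
        if current.weekday() >= 5 or current >= day_close:
            current = _next_open(current)  # weekend or after hours: skip to the next opening
            continue
        current = max(current, day_open)   # before opening: start counting at opening
        available = day_close - current
        if remaining <= available:
            return current + remaining
        remaining -= available
        current = _next_open(current)


queued = datetime(2024, 5, 3, 18, 12)  # a Friday, after hours
review_start_due = add_business_hours(queued, 4)
decision_due = add_business_hours(queued, 8)  # "1 business day" read as 8 business hours
print(review_start_due, decision_due)
# 2024-05-06 13:00:00 2024-05-06 17:00:00
```

Under these assumptions, a review queued at 6:12 PM on a Friday must start by 1:00 PM the following Monday, which is the number to show in the candidate's review clock and on the breach dashboard.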

Outcome = Unable to verify today (fallback required)

- Owner: Recruiting Ops for candidate support, Security for alternate method approval
- SLA: candidate response within 4 business hours; alternate verification scheduled within 1 business day
- Candidate copy principle: accessibility-first. Offer an alternate path (assisted session or document rescan)
- Logged: accessibility_fallback_offered, candidate_selected_path, support_ticket_id

Outcome = Not proceeding (policy decision)

- Owner: Security recommends, Recruiting Ops executes, Legal defines retention and wording
- SLA: decision logged before message; message within 30 minutes of decision
- Candidate copy principle: final but non-accusatory. Do not allege fraud. Provide an appeal channel if policy allows
- Logged: decision_finalized, approver_id, reason_code, message_sent(template=NOT_PROCEEDING_V1), retention_policy_applied

Operational metrics to put on a weekly dashboard:

- Time-to-first-message by outcome state (p50, p90)
- Manual review SLA breach rate
- Verification completion rate and retry success rate
- Candidate support tickets per 100 verifications (segmented by device and region)
- Offer-stage fallout where verification was not completed before interviews (should trend to zero)
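The first two dashboard metrics can be computed directly from logged timestamps. A minimal sketch, assuming events are exported as dictionaries with hypothetical field names (`state`, `outcome_at`, `first_message_at`, `review_started_at`, `review_start_deadline`) and using the nearest-rank percentile definition.

```python
import math
from collections import defaultdict
from datetime import datetime, timedelta


def percentile_nearest_rank(values: list, p: float) -> float:
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def time_to_first_message(events: list) -> dict:
    """p50/p90 seconds from outcome to first candidate message, grouped by outcome state."""
    by_state = defaultdict(list)
    for e in events:
        by_state[e["state"]].append((e["first_message_at"] - e["outcome_at"]).total_seconds())
    return {
        state: {"p50": percentile_nearest_rank(v, 50), "p90": percentile_nearest_rank(v, 90)}
        for state, v in by_state.items()
    }


def sla_breach_rate(reviews: list) -> float:
    """Share of manual reviews that started after their review-start deadline."""
    if not reviews:
        return 0.0
    breaches = sum(1 for r in reviews if r["review_started_at"] > r["review_start_deadline"])
    return breaches / len(reviews)


t0 = datetime(2024, 5, 1, 9, 0)
events = [{"state": "verified", "outcome_at": t0, "first_message_at": t0 + timedelta(seconds=s)}
          for s in (5, 10, 20, 600)]
reviews = [
    {"review_started_at": t0 + timedelta(hours=2), "review_start_deadline": t0 + timedelta(hours=4)},
    {"review_started_at": t0 + timedelta(hours=6), "review_start_deadline": t0 + timedelta(hours=4)},
]
print(time_to_first_message(events))  # {'verified': {'p50': 10.0, 'p90': 600.0}}
print(sla_breach_rate(reviews))        # 0.5
```

Reporting p90 alongside p50 matters: in this sample, one 10-minute delay leaves the median untouched but dominates p90, which is the number that reveals stalled messages.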

Verified: "Verification complete. Your next step is [interview/assessment]. You will receive details within [time]."

Retry: "We could not complete verification due to a technical issue. Please retry using this link. It typically takes 2-3 minutes."

Manual review: "Your verification is in manual review. You do not need to take action. We will update you within 1 business day."

Fallback: "If you cannot complete this on your device, choose an alternate option. We support assisted review."

Not proceeding: "We are unable to move forward at this time based on our verification policy. If you believe this is an error, contact [channel]."
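These templates contain placeholders such as [interview/assessment], [time], and [channel]. A minimal sketch of a renderer that refuses to send copy with an unfilled placeholder, assuming templates are stored with Python `{named}` fields; the storage format and the `render` function are hypothetical.

```python
from string import Formatter

# Hypothetical storage format: the post's [bracketed] placeholders stored as {named} fields.
COPY_TEMPLATES = {
    "VERIFIED_V1": ("Verification complete. Your next step is {next_step}. "
                    "You will receive details within {eta}."),
    "NOT_PROCEEDING_V1": ("We are unable to move forward at this time based on our verification "
                          "policy. If you believe this is an error, contact {channel}."),
}


def render(template_id: str, **values: str) -> str:
    """Fill a template, refusing to produce copy with an unfilled placeholder."""
    text = COPY_TEMPLATES[template_id]
    # Formatter().parse yields (literal, field_name, format_spec, conversion) tuples.
    fields = {name for _, name, _, _ in Formatter().parse(text) if name}
    missing = fields - set(values)
    if missing:
        raise KeyError(f"{template_id} is missing values for {sorted(missing)}")
    return text.format(**values)


print(render("VERIFIED_V1", next_step="a technical interview", eta="24 hours"))
# Verification complete. Your next step is a technical interview. You will receive details within 24 hours.
```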


Key takeaways

- Outcome messaging is part of the control plane: define explicit status states, owners, and SLAs or you will create shadow workflows and audit gaps.

- Non-alarming copy reduces fallout by explaining the reason for friction, the exact next step, and the review clock, then logging that communication in the ATS.

- Every verification outcome must write an immutable event to the evidence pack: status, timestamp, reason code, and who can override.

- Accessibility is not optional: provide a fallback path (manual review or alternative verification) and log why it was invoked.
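An "immutable" event log can be approximated with a hash chain, where each entry's hash covers the previous entry's hash. A minimal sketch using only the Python standard library; `EvidenceLog` is a hypothetical class, and a hash chain is one technique for tamper evidence, not a description of how any particular ATS or IntegrityLens stores evidence.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with a canonical JSON encoding of the event."""
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class EvidenceLog:
    """Append-only, hash-chained event log: altering any past entry breaks verify()."""

    def __init__(self):
        self.entries = []  # list of (event, hash) pairs

    def append(self, event: dict) -> str:
        prev = self.entries[-1][1] if self.entries else GENESIS
        entry_hash = _digest(prev, event)
        self.entries.append((dict(event), entry_hash))
        return entry_hash

    def verify(self) -> bool:
        prev = GENESIS
        for event, entry_hash in self.entries:
            if _digest(prev, event) != entry_hash:
                return False
            prev = entry_hash
        return True


log = EvidenceLog()
log.append({"candidate_id": "cand-42", "status": "manual_review", "timestamp": "2024-05-03T18:12:00Z",
            "reason_code": "FACE_MATCH_INCONCLUSIVE", "template_id": "MANUAL_REVIEW_V1"})
log.append({"candidate_id": "cand-42", "status": "verified", "timestamp": "2024-05-06T10:05:00Z",
            "reviewer_id": "security-lead", "template_id": "VERIFIED_V1"})
print(log.verify())  # True
log.entries[0][0]["reason_code"] = "DOC_UNREADABLE"  # simulate a quiet after-the-fact edit
print(log.verify())  # False
```

A hash chain makes edits detectable, not impossible: someone who can rewrite the entire log can also recompute every hash. Anchoring the latest hash somewhere separately controlled, such as the ATS record, closes that gap.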

Use this as the single source of truth for outcomes, owners, SLAs, and which copy template is allowed per state.

Store this policy in a controlled repo, version it, and require Security and Legal approvals for changes.

```yaml
policy_name: verification-outcome-messaging
version: 1.0
owners:
  recruiting_ops: "vp-talent-ops"
  security: "security-lead"
  legal: "privacy-counsel"
channels:
  - email
  - sms
  - in_app
states:
  verified:
    candidate_facing_label: "Verification complete"
    allowed_templates: ["VERIFIED_V1"]
    sla:
      message_dispatch: "immediate"
    routing:
      next_step: "unlock_interview_and_assessment"
    logging:
      events: ["verification_completed", "message_sent"]
      required_fields: ["timestamp", "template_id", "delivery_status", "candidate_id"]
  action_required_retry:
    candidate_facing_label: "Action required"
    allowed_templates: ["RETRY_V1"]
    sla:
      message_dispatch: "<1m"
      retry_window: "24h"
    routing:
      next_step: "reverify"
    logging:
      events: ["verification_retry_requested", "message_sent"]
      required_fields: ["timestamp", "retry_count", "template_id", "delivery_status"]
  manual_review:
    candidate_facing_label: "In manual review"
    allowed_templates: ["MANUAL_REVIEW_V1"]
    sla:
      review_start: "<4_business_hours"
      decision: "<1_business_day"
    routing:
      queue: "security-manual-review"
    logging:
      events: ["manual_review_queued", "review_started", "review_decided", "message_sent"]
      required_fields: ["timestamp", "reviewer_id", "decision", "reason_code", "notes"]
  fallback_required:
    candidate_facing_label: "Alternate verification available"
    allowed_templates: ["FALLBACK_V1"]
    sla:
      candidate_response: "<4_business_hours"
      alternate_scheduled: "<1_business_day"
    routing:
      queue: "recruiting-ops-support"
    logging:
      events: ["accessibility_fallback_offered", "fallback_selected", "message_sent"]
      required_fields: ["timestamp", "support_ticket_id", "selected_path"]
  not_proceeding:
    candidate_facing_label: "Unable to proceed"
    allowed_templates: ["NOT_PROCEEDING_V1"]
    sla:
      message_dispatch: "<30m_after_decision"
    routing:
      next_step: "close_candidate"
    logging:
      events: ["decision_finalized", "message_sent", "retention_policy_applied"]
      required_fields: ["timestamp", "approver_id", "reason_code", "template_id"]
controls:
  override_policy:
    allowed: true
    requires:
      - "approver_id"
      - "reason_code"
      - "time_bound_expiration"
  accessibility:
    wcag_target: "WCAG 2.1"
    required_fallback_paths: ["assisted_session", "manual_review"]
  audit:
    statement: "If it is not logged, it is not defensible. All outbound messages must be ATS-anchored."
```

Outcome proof: What changes

Before

Verification outcomes were communicated via ad hoc email by recruiters. Manual review had no SLA, and exceptions were approved in chat. When candidates asked what happened, responses varied by recruiter and region.

After

Recruiting Ops standardized five verification outcome states with approved templates, instrumented SLAs, and ATS-anchored message logging. Security took ownership of the manual review queue with a defined review clock and required reason codes for overrides.

Implementation checklist

- Define verification states and reason codes that are candidate-safe but audit-meaningful.

- Assign owners per state (Recruiting Ops, Security, Hiring Manager) and set review-bound SLAs.

- Ship copy for each state with: what happened, what happens next, how long it takes, and how to get help.

- Log every outbound message as an ATS-anchored event with timestamp and template ID.

- Implement an accessibility and device-failure fallback path with a manual review queue.

- Measure time-to-event per state and candidate completion rate per step.
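The logging items in this checklist can be enforced mechanically against the policy file. A minimal sketch, assuming a subset of that policy inlined as a Python dict so no YAML parser is needed; `check_message_event` is a hypothetical helper.

```python
# A subset of the policy file in this post, inlined so the sketch needs no YAML parser.
# In practice you might load the versioned file instead (for example with PyYAML's yaml.safe_load).
POLICY_STATES = {
    "verified": {
        "allowed_templates": ["VERIFIED_V1"],
        "required_fields": ["timestamp", "template_id", "delivery_status", "candidate_id"],
    },
    "not_proceeding": {
        "allowed_templates": ["NOT_PROCEEDING_V1"],
        "required_fields": ["timestamp", "approver_id", "reason_code", "template_id"],
    },
}


def check_message_event(state: str, event: dict) -> list:
    """Return policy violations for one outbound-message event; empty means it can be logged as-is."""
    rules = POLICY_STATES.get(state)
    if rules is None:
        return [f"unknown state {state!r}"]
    problems = [f"missing field {f!r}" for f in rules["required_fields"] if not event.get(f)]
    if event.get("template_id") not in rules["allowed_templates"]:
        problems.append(f"template {event.get('template_id')!r} not allowed for {state}")
    return problems


ok = {"candidate_id": "cand-42", "timestamp": "2024-05-06T10:05:00Z",
      "template_id": "VERIFIED_V1", "delivery_status": "delivered"}
ad_hoc = {"candidate_id": "cand-7", "timestamp": "2024-05-06T10:07:00Z", "template_id": "RECRUITER_EMAIL"}
print(check_message_event("verified", ok))  # []
print(check_message_event("verified", ad_hoc))
# ["missing field 'delivery_status'", "template 'RECRUITER_EMAIL' not allowed for verified"]
```

An ad hoc recruiter email fails on both counts, which is exactly the shadow workflow the policy is meant to surface.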

Questions we hear from teams

- How transparent should we be when verification is inconclusive?
  - Be transparent about the process and timeline, not the underlying fraud heuristics. Say it is in manual review, provide a review clock, and log the state transition and message template ID in the ATS-anchored audit trail.

- Do we need different copy for suspected fraud versus technical failure?
  - Internally yes, externally no. Use different internal reason codes and routing, but keep candidate-facing language non-accusatory and focused on next steps to avoid defamation risk and to prevent teaching attackers how to adapt.

- What is the minimum logging we need for defensibility?
  - At minimum: outcome state, timestamp, message template ID, delivery status, reviewer or approver identity for any manual decision, and a structured reason code stored in an immutable evidence pack tied to the candidate record.

- How do we keep speed while adding manual review?
  - Use review-bound SLAs with queue ownership, and parallelize what you can. The operational goal is to prevent unbounded waiting by making review start and decision times measurable and enforced.

Ready to secure your hiring pipeline?

Let IntegrityLens help you verify identity, stop proxy interviews, and standardize screening from first touch to final offer.
