Stop Identity Farming in Remote Hiring Without Slowing Support

Identity farming turns your assessment funnel into a shared account: one verified person completes tests and interviews for dozens of candidates. This playbook shows how to detect it early, escalate verification only when risk signals justify it, and keep your candidate experience fast and defensible.

Treat verification as a continuous state tied to sessions, not a checkbox tied to an account.

The day your top client asks who actually took the test

A Tier 1 customer escalates: the new hire cannot complete basic tasks, but their assessment score was near-perfect. Then a second wave hits your Support queue: candidates saying their accounts were "flagged for no reason" after finishing an assessment. In the war room, you discover that one face passed verification, and over the following two weeks dozens of different candidate profiles submitted tests from the same device pattern. This is identity farming: one verified person repeatedly taking assessments and screens on behalf of many others. If you treat identity as a one-time gate, you will miss it. If you add heavy checks for everyone, you will burn candidate goodwill and still have gaps. By the end of this playbook, you will have a step-up design that catches repeat test takers, minimizes false positives, and gives your team a clean Evidence Pack to resolve appeals fast.

Why identity farming slips through "verified once" pipelines

Recommendation: assume the attacker can pass a single verification once, then optimize for detecting reuse across sessions. Identity farming thrives because hiring workflows have long, unobserved gaps. A candidate verifies identity on Monday, then takes assessments on Wednesday, then joins an interview on Friday. If those steps are not cryptographically and operationally linked to the same verified person, you are trusting an account, not a human. Use passive signals first (device, network, behavior) to detect suspicious reuse without adding friction. Then step up to stronger checks only when the signals indicate a shared operator behind many profiles.

31% of hiring managers in Checkr's 2025 manager survey say they have interviewed someone who later turned out to be using a false identity. This suggests the problem is not an edge case and that interview stages are a common failure point. It does not prove your org will see the same rate, since the survey scope and role mix may differ.

Pindrop reported 1 in 6 applicants to remote roles showed signs of fraud in one real-world pipeline. This suggests remote funnels concentrate attack volume. It does not prove all industries face the same baseline, and "signs of fraud" is broader than confirmed identity farming.

Ownership, automation, and sources of truth

Recommendation: make Recruiting Ops the process owner, Security the policy approver, and Support the escalation owner with clear SLAs. If ownership is vague, identity farming turns into an endless loop of "Recruiting says Security blocked it" and "Security says Recruiting approved it" while Support absorbs candidate and client frustration. Sources of truth must be explicit: the ATS is the system of record for status and decisions, the verification service is the system of record for identity events, and the interview and assessment systems are the system of record for session telemetry. Your architecture should write back a single, readable verification state into the ATS so downstream teams stop making judgment calls off screenshots.

- Recruiting Ops owns workflow: thresholds, step-up points, candidate messaging templates.

- Security owns controls: acceptable signals, retention rules, access controls, audit readiness.

- Hiring Managers consume outcomes: they do not run investigations; they follow "verified / step-up required" gates.

- Support and CS own escalations: appeal intake, Evidence Pack delivery, and time-bound resolution.

- Automated: passive signal scoring, duplicate pattern detection, step-up triggers, webhook logging, Evidence Pack assembly.

- Manual: only the exception queue (borderline risk, repeated failures, accessibility issues, high-value roles).

- ATS: candidate identity state, step-up required flag, final disposition, immutable decision notes.

- Verification layer: document + voice + face result, liveness outcome, confidence, timestamps.

- Assessment and interview layers: session IDs, device fingerprints, network hints, proctoring anomalies.
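The "single, readable verification state" can be made concrete with a minimal Python sketch. It collapses records from the verification layer and the session layers into one value the ATS can store. The state names, the record fields, and the rule that every session must be bound to the most recently verified person are illustrative assumptions. They are not an IntegrityLens or ATS API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class VerificationState(str, Enum):
    # Hypothetical labels; map them to whatever field values your ATS accepts.
    PENDING = "pending"
    VERIFIED = "verified"
    STEP_UP_REQUIRED = "step_up_required"


@dataclass(frozen=True)
class IdentityEvent:
    """One result from the verification layer (system of record for identity events)."""
    person_id: str
    passed: bool
    timestamp: float


@dataclass(frozen=True)
class SessionRecord:
    """Telemetry from the assessment or interview layer (system of record for sessions)."""
    session_id: str
    bound_person_id: Optional[str]  # None: the session was never bound to a verified person
    step_up_required: bool = False


def ats_verification_state(
    events: List[IdentityEvent], sessions: List[SessionRecord]
) -> VerificationState:
    """Collapse identity events and session telemetry into the one state written to the ATS."""
    passed = [e for e in events if e.passed]
    if not passed:
        return VerificationState.PENDING
    # Sessions are checked against the most recent successful verification.
    verified_person = max(passed, key=lambda e: e.timestamp).person_id
    for session in sessions:
        # An open step-up, an unbound session, or a session bound to someone else blocks "verified".
        if session.step_up_required or session.bound_person_id != verified_person:
            return VerificationState.STEP_UP_REQUIRED
    return VerificationState.VERIFIED
```

Hiring Managers then read one field instead of comparing screenshots from three systems. The per-system records stay in their own sources of truth.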

Risk-tiered controls that stop repeat test takers

Recommendation: implement three tiers and make escalation idempotent so retries do not create data chaos. Tier 0 (baseline): passive signals only. Tier 1 (light step-up): selfie liveness and session binding before assessment start. Tier 2 (strong): document + voice + face verification before interview start and before offer. IntegrityLens can verify identity in under three minutes in typical end-to-end flows (document + voice + face), which makes strong checks viable when triggered, not blanket. Tune your thresholds around two operator outcomes: reduce false positives (legit candidates wrongly stepped up) and reduce false negatives (ringers not caught). Start conservative, then tighten based on exception queue volume and confirmed fraud outcomes.

- Device reuse across many candidate profiles in a short window (same hardware and browser traits).

- Network clustering: repeated submissions from the same ASN, VPN exit nodes, or impossible travel patterns.

- Behavioral consistency: unusually fast completion times, copy-paste spikes, repeated identical keystroke rhythm signatures (if available).
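Device reuse detection can be sketched as a sliding time window per device fingerprint. The window below counts distinct candidate profiles and flags a device once the count reaches a limit. The defaults borrow `windowHours: 72` and `highRiskProfilesPerDevice: 5` from the example policy in this post. Treating 5 as "at least 5 profiles" and modelling the fingerprint as an opaque string are assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass(frozen=True)
class Submission:
    candidate_id: str
    device_fingerprint: str  # assumed: an opaque hash of hardware and browser traits
    hour: float              # submission time in hours since an arbitrary epoch


def shared_devices(
    submissions: Iterable[Submission],
    window_hours: float = 72.0,
    max_profiles: int = 5,
) -> Set[str]:
    """Return fingerprints used by at least `max_profiles` distinct candidates within `window_hours`."""
    by_device: Dict[str, List[Submission]] = defaultdict(list)
    for sub in submissions:
        by_device[sub.device_fingerprint].append(sub)

    flagged: Set[str] = set()
    for device, subs in by_device.items():
        subs.sort(key=lambda s: s.hour)
        in_window: Dict[str, int] = defaultdict(int)  # candidate_id -> submissions inside the window
        left = 0
        for sub in subs:
            in_window[sub.candidate_id] += 1
            # Slide the window start forward until it spans at most `window_hours`.
            while sub.hour - subs[left].hour > window_hours:
                old = subs[left].candidate_id
                in_window[old] -= 1
                if in_window[old] == 0:
                    del in_window[old]
                left += 1
            if len(in_window) >= max_profiles:
                flagged.add(device)
                break
    return flagged
```

Counting distinct profiles rather than raw submissions means one legitimate candidate retrying on their own laptop is not flagged.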

- Assessment start: if device or network risk score crosses threshold, require liveness check to bind the session to a person.

- Interview join: if the face does not match prior verification, require re-verification before the interview proceeds.

- Offer stage: if any prior step-up was triggered, require strong verification again to re-establish chain-of-custody.
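The tiers and step-up points can be sketched as a small scoring function plus a stage-to-tier mapping. The sketch copies the weights (50/20/15) and thresholds (55/80) from the example policy in this post. Two choices are assumptions: each network or behavior flag adds its weight once, and the offer stage follows the prose rule above (any prior step-up) rather than the YAML's `ifAnyRiskSignals`. Escalation uses `max`, so replaying a trigger never changes or lowers the required tier.

```python
# Sample weights and thresholds copied from the example policy in this post; tune them.
WEIGHTS = {"deviceReuseHigh": 50, "networkRiskFlag": 20, "behaviorAnomalyFlag": 15}
STEP_UP_TO_TIER1 = 55
STEP_UP_TO_TIER2 = 80


def risk_score(device_reuse_high: bool, network_flags: int, behavior_flags: int) -> int:
    """Additive score; assumes each network or behavior flag adds its weight once, uncapped."""
    score = WEIGHTS["deviceReuseHigh"] if device_reuse_high else 0
    score += WEIGHTS["networkRiskFlag"] * network_flags
    score += WEIGHTS["behaviorAnomalyFlag"] * behavior_flags
    return score


def required_tier(
    stage: str, score: int, prior_step_up: bool = False, face_mismatch: bool = False
) -> int:
    """Map a stage boundary to the tier it requires: 0 passive, 1 liveness, 2 strong."""
    if stage == "assessment_start":
        if score >= STEP_UP_TO_TIER2:
            return 2
        return 1 if score >= STEP_UP_TO_TIER1 else 0
    if stage == "interview_join":
        return 2 if (prior_step_up or face_mismatch) else 0
    if stage == "offer_ready":
        return 2 if prior_step_up else 0
    raise ValueError(f"unknown stage: {stage}")


def escalate(current_tier: int, new_tier: int) -> int:
    """Idempotent escalation: replaying the same trigger never changes or lowers the tier."""
    return max(current_tier, new_tier)
```

With these sample numbers, device reuse alone (50) stays below the liveness threshold. It needs one corroborating network flag (50 + 20 = 70) to trigger liveness. A network flag plus a behavior flag (50 + 20 + 15 = 85) triggers strong verification.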

- If ID scan fails: offer alternate document types and assisted capture with a timed link.

- If liveness fails: allow a single retry, then route to a short live verification slot.

- If accessibility constraints apply: provide a documented exception path approved by Security, still producing an Evidence Pack.
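The fallback paths can be expressed as a tiny decision function, so that no candidate is left in limbo. Retry limits mirror the example policy (`maxRetries: 2` for ID scan, `1` for liveness). Routing an exhausted ID scan to a live slot follows the implementation checklist's "scheduled live check". The step names are hypothetical.

```python
from enum import Enum

# Retry limits taken from the example policy's fallbacks section.
ID_SCAN_MAX_RETRIES = 2
LIVENESS_MAX_RETRIES = 1


class NextStep(str, Enum):
    RETRY_ID_SCAN = "retry_with_alternate_doc_or_assisted_capture"
    RETRY_LIVENESS = "retry_liveness"
    LIVE_VERIFICATION = "schedule_live_verification_slot"
    SECURITY_EXCEPTION = "security_approved_exception"  # still produces an Evidence Pack


def next_step(check: str, failures: int, accessibility_exception: bool = False) -> NextStep:
    """Choose the fallback after `failures` failed attempts at `check` ("id_scan" or "liveness")."""
    if failures < 1:
        raise ValueError("next_step is only called after at least one failure")
    if accessibility_exception:
        return NextStep.SECURITY_EXCEPTION
    if check == "id_scan":
        limit, retry = ID_SCAN_MAX_RETRIES, NextStep.RETRY_ID_SCAN
    elif check == "liveness":
        limit, retry = LIVENESS_MAX_RETRIES, NextStep.RETRY_LIVENESS
    else:
        raise ValueError(f"unknown check: {check}")
    # Offer a retry while retries remain; once they are used up, hand off to a human.
    return retry if failures <= limit else NextStep.LIVE_VERIFICATION
```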

A policy you can hand to Recruiting Ops and Security

Recommendation: codify triggers, SLAs, and Evidence Pack requirements in a single config so changes are deliberate and reviewable. The YAML example later in this playbook is a realistic policy configuration for detecting identity farming via shared device patterns and enforcing step-up verification at assessment and interview boundaries.
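The sample policy in this post repeats the 55 and 80 thresholds in both `riskScoring.thresholds` and `stageRules`, so an edit to one can silently drift from the other. A minimal review-time check helps catch this. The sketch assumes the YAML has already been parsed into a dict, for example with PyYAML's `yaml.safe_load`. The function name and error messages are illustrative.

```python
from typing import Any, Dict, List


def validate_policy(policy: Dict[str, Any]) -> List[str]:
    """Return human-readable problems found in a parsed step-up policy (empty list = OK)."""
    errors: List[str] = []
    tiers = policy["verificationTiers"]
    thresholds = policy["riskScoring"]["thresholds"]
    if thresholds["stepUpToTier1"] >= thresholds["stepUpToTier2"]:
        errors.append("stepUpToTier1 must be lower than stepUpToTier2")
    known = set(thresholds.values())
    for rule in policy["stageRules"]:
        for action in rule["actions"]:
            tier = action.get("requireTier")
            if tier not in tiers:
                errors.append(f"{rule['stage']}: unknown tier {tier!r}")
            score = action.get("ifRiskScoreGte")
            if score is not None and score not in known:
                errors.append(f"{rule['stage']}: threshold {score} is not in riskScoring.thresholds")
    return errors
```

Running this in CI on every policy change keeps the "deliberate and reviewable" promise enforceable rather than aspirational.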

How Support resolves an appeal in minutes, not days

Recommendation: Support should never "investigate from scratch". They should retrieve a standardized Evidence Pack attached to the ATS record. When a candidate appeals a step-up or rejection, Support opens the ATS record and pulls the Evidence Pack: verification events, session IDs, passive signal summary, and the exact policy rule that fired. If the decision stands, Support responds with policy-based language and an offer to retry verification. If it was a false positive, Support can authorize a controlled override with attribution, preserving auditability.

- Verification timeline: timestamps for document, face, voice, liveness, and re-verification events.

- Session binding: which verified identity was bound to which assessment and interview session IDs.

- Passive signals summary: device reuse count, network risk flags, anomaly highlights (summaries only, no raw sensitive data).

- Decision log: rule ID, threshold crossed, reviewer actions, and candidate communications.
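The Evidence Pack contents above map naturally onto a small record with an append-only decision log. A controlled override is just another logged decision that must carry a reviewer name. This is a minimal sketch with hypothetical field names. Python cannot truly enforce immutability, so the ATS or an append-only store should hold the authoritative log.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Decision:
    rule_id: str             # the policy rule that fired
    action: str              # e.g. "step_up_tier1", "override"
    reviewer: Optional[str]  # None for automated decisions
    note: str


@dataclass
class EvidencePack:
    candidate_id: str
    verification_timeline: List[Tuple[str, str]] = field(default_factory=list)  # (event, ISO-8601 time)
    session_bindings: Dict[str, str] = field(default_factory=dict)  # session_id -> verified person id
    signal_summary: Dict[str, int] = field(default_factory=dict)    # counts and flags only, no raw data
    _decisions: List[Decision] = field(default_factory=list)

    def record(self, decision: Decision) -> None:
        """Append a decision; nothing in this class edits or deletes earlier entries."""
        self._decisions.append(decision)

    def override(self, rule_id: str, reviewer: str, note: str) -> None:
        """Controlled override: refused unless a named reviewer is attached."""
        if not reviewer:
            raise ValueError("overrides require reviewer attribution")
        self.record(Decision(rule_id, "override", reviewer, note))

    @property
    def decision_log(self) -> Tuple[Decision, ...]:
        # Read-only snapshot; real immutability belongs in the ATS or an append-only store.
        return tuple(self._decisions)
```

Because an override appends rather than rewrites, an auditor can still see both the original step-up and who reversed it.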

Anti-patterns that make fraud worse

Recommendation: eliminate these three behaviors first, because they increase both fraud and support load.

- Using a single, one-time ID check at application and never re-binding identity to assessment and interview sessions.

- Letting recruiters override flags in chat or email without an immutable log and without attaching evidence to the ATS record.

- Applying heavy verification to every candidate by default, which increases drop-off and trains attackers to optimize around a predictable checkpoint.

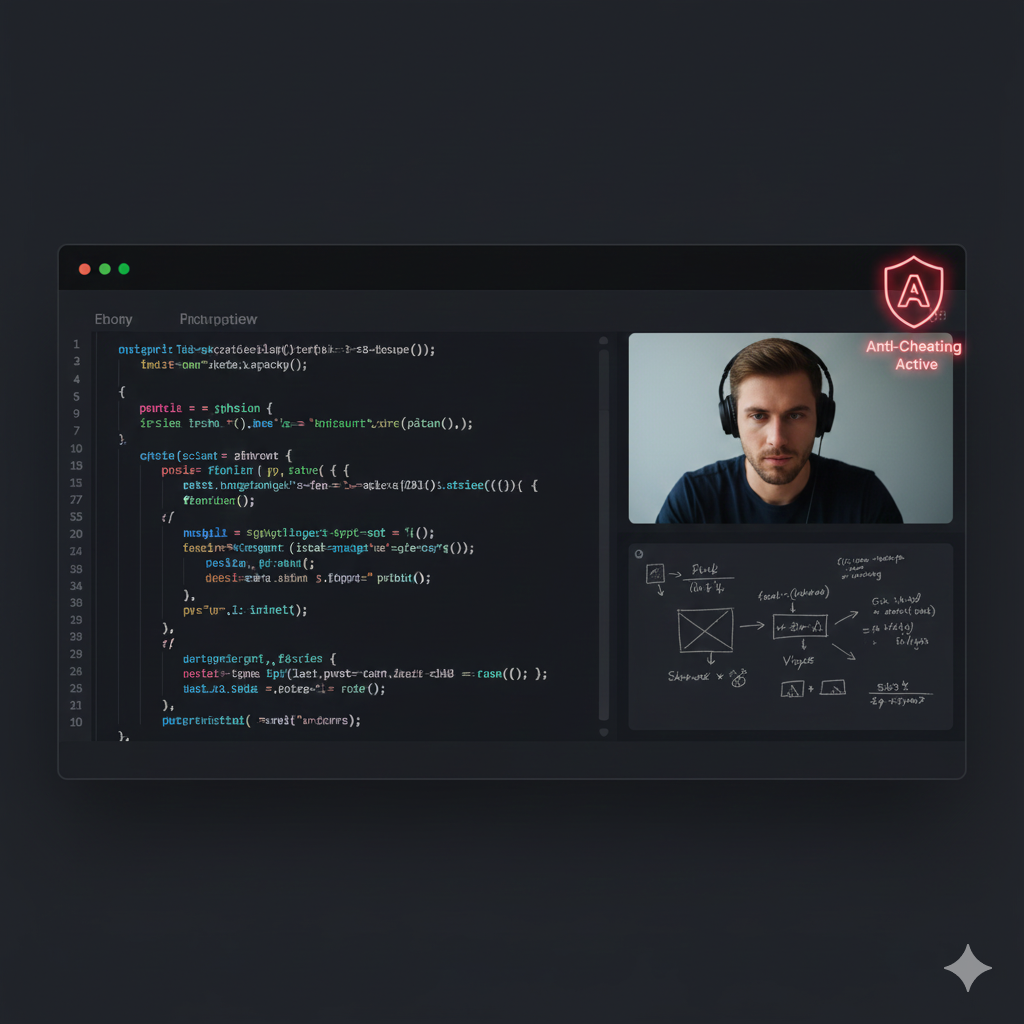

Where IntegrityLens fits

IntegrityLens AI is the first hiring pipeline that combines a full Applicant Tracking System with advanced biometric identity verification, AI screening, and technical assessments, so identity farming is addressed inside one defensible workflow. Teams use it to keep verification fast, step-up checks targeted, and evidence centralized for appeals and audits. IntegrityLens supports:

- ATS workflow from source to offer, with verification state written into the record

- Biometric identity verification (document + voice + face), typically completed in 2-3 minutes, so it can run before interviews when triggered

- Fraud detection using passive signals to drive Risk-Tiered Verification and reduce reviewer fatigue

- 24/7 AI screening interviews and coding assessments in 40+ languages, tied to verified sessions

- Evidence Packs and idempotent webhooks for clean integrations used by TA leaders, recruiting ops, and CISOs

Operator runbook to deploy this in two sprints

Recommendation: ship the detection and step-up loop first, then optimize thresholds based on observed exceptions. Sprint 1: instrument passive signals, define risk tiers, and enforce liveness at assessment start for high-risk sessions. Sprint 2: add interview join re-verification, Evidence Pack automation, and a formal appeal workflow with SLAs. Keep your metrics operational: exception queue volume, median time-to-resolution for appeals, and the percentage of sessions successfully bound to a verified identity. Avoid vanity metrics and focus on whether Support can close the loop without escalation.

- Define policy: triggers, thresholds, and which stage requires which tier.

- Wire event flow: assessment start, interview join, and offer stage all emit events to the verification policy engine.

- Enable idempotent webhooks: retries must not create duplicate verification states or conflicting logs.

- Stand up an exception queue: one place for manual review with templates and SLAs.

- Deploy candidate messaging: short, respectful explanations and a clear retry path.

- Review weekly: tune thresholds based on confirmed fraud and false positives, then document changes.
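The idempotent-webhook step can be sketched with the key shape from the example policy (`candidateId:stage:sessionId:policyId`). A redelivered event with the same key is acknowledged but changes nothing. The payload field names and the in-memory store are assumptions. A production receiver would rely on a durable store with a unique constraint on the key.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


def idempotency_key(event: Dict[str, Any]) -> str:
    # Same shape as the example policy's key: candidateId:stage:sessionId:policyId
    return f"{event['candidateId']}:{event['stage']}:{event['sessionId']}:{event['policyId']}"


@dataclass
class WebhookReceiver:
    """In-memory stand-in; a real receiver needs a durable store with a unique key constraint."""
    states: Dict[str, str] = field(default_factory=dict)  # key -> verification outcome
    log: List[str] = field(default_factory=list)

    def handle(self, event: Dict[str, Any]) -> bool:
        """Apply a verification-state webhook once; return False for redelivered duplicates."""
        key = idempotency_key(event)
        if key in self.states:
            # Retry of an event already applied: no second state, no second log entry.
            return False
        self.states[key] = event["verificationOutcome"]
        self.log.append(key)
        return True
```

This is first-writer-wins: a redelivery carrying a different outcome for the same key is ignored. A genuinely new attempt should therefore arrive with a new session ID.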

Sources

- Checkr (2025): Hiring Hoax (Manager Survey, 2025). https://checkr.com/resources/articles/hiring-hoax-manager-survey-2025

- Pindrop: Why your hiring process is now a cybersecurity vulnerability. https://www.pindrop.com/article/why-your-hiring-process-now-cybersecurity-vulnerability/


Key takeaways

- Identity farming is best stopped with continuous verification state, not a single one-time ID check.

- Lead with passive signals (device, network, behavior) to avoid adding friction to every candidate.

- Use step-up verification triggers that are explicit, logged, and idempotent so Support can defend decisions under appeal.

- Design fallbacks for ID scan failures so legitimate candidates do not get trapped in limbo.

- Write every decision into an Evidence Pack attached to the ATS record to reduce reviewer fatigue and escalations.

- Use passive signals to detect shared operators across many candidate profiles.

- Escalate to liveness or strong verification only when risk crosses defined thresholds.

- Write every trigger and outcome into an Evidence Pack attached to the ATS record.

```yaml
policyId: identity-farming-stepup-v1
version: 1

owner:
  recruitingOps: "recruiting-ops@company.com"
  securityApprover: "security@company.com"
  supportEscalation: "cs-escalations@company.com"

verificationTiers:
  tier0_passive:
    description: "No candidate friction. Collect passive signals and score risk."
  tier1_liveness:
    description: "Selfie liveness + session binding before assessment or interview."
    slaMinutes: 10
  tier2_strong:
    description: "Document + face + voice verification before interview start and before offer."
    expectedDurationMinutes: "2-3 (typical)"

passiveSignals:
  deviceReuse:
    windowHours: 72
    highRiskProfilesPerDevice: 5
  networkRisk:
    flags: ["vpn-exit", "datacenter-ip", "high-risk-asn", "geo-impossible-travel"]
  behaviorAnomalies:
    flags: ["assessment-too-fast", "copy-paste-spike", "tab-switching-high"]

riskScoring:
  weights:
    deviceReuseHigh: 50
    networkRiskFlag: 20
    behaviorAnomalyFlag: 15
  thresholds:
    stepUpToTier1: 55
    stepUpToTier2: 80

stageRules:
  - stage: "assessment_start"
    actions:
      # These values mirror riskScoring.thresholds; keep the two in sync.
      # A score of 80+ matches both rules; the engine is assumed to apply the stricter tier.
      - ifRiskScoreGte: 55
        requireTier: "tier1_liveness"
        bindSessionToVerifiedPerson: true
      - ifRiskScoreGte: 80
        requireTier: "tier2_strong"
        bindSessionToVerifiedPerson: true
  - stage: "interview_join"
    actions:
      - ifPriorStepUpTriggered: true
        requireTier: "tier2_strong"
      - ifFaceMismatchDetected: true
        requireTier: "tier2_strong"
  - stage: "offer_ready"
    actions:
      - ifAnyRiskSignals: true
        requireTier: "tier2_strong"

fallbacks:
  idScanFailure:
    allowAlternateDocs: true
    assistedCaptureEnabled: true
    maxRetries: 2
  livenessFailure:
    maxRetries: 1
    routeToLiveCheck: true

logging:
  writeEvidencePackToATS: true
  includeFields:
    - "policyId"
    - "ruleTriggered"
    - "riskScore"
    - "signalSummary"
    - "verificationTierRequired"
    - "verificationOutcome"
    - "sessionIds"
  # Retries carrying the same key must not create a second verification state or log entry.
  idempotencyKey: "${candidateId}:${stage}:${sessionId}:${policyId}"

appeals:
  enabled: true
  supportSlaHours: 24
  allowOneFreeReverify: true
  requireReviewerAttribution: true
```

Outcome proof: What changes

Before

Assessments were treated as trusted once a candidate passed an initial identity check. Support handled repeated disputes with little shared context, and recruiters manually stitched together screenshots from multiple tools.

After

Verification became a continuous state tied to assessment and interview sessions, with risk-tiered step-ups and standardized Evidence Packs written into the ATS record. Support used the Evidence Pack to resolve appeals consistently without ad hoc investigations.

Implementation checklist

- Define what counts as a re-verification trigger (device, network, behavior, assessment anomalies).

- Implement risk-tiered step-ups: none, light (selfie liveness), strong (doc + voice + face).

- Add an appeal path with SLA and clear candidate messaging.

- Instrument webhooks so every verification state change is logged and idempotent.

- Create a fallback path for scan failures (alternate doc, assisted capture, scheduled live check).

- Require re-verification at high-risk transitions: before interview start and before offer.

Questions we hear from teams

- What is identity farming in hiring?

- Identity farming is a fraud pattern where one real person repeatedly completes assessments or interviews for many candidate profiles, using shared devices, networks, or coached session handoffs.

- Why are passive signals the first line of defense?

- Passive signals (device, network, behavior) catch reuse patterns with near-zero candidate friction, letting you reserve step-up verification for the small slice of sessions that look shared or scripted.

- How do you reduce false positives when blocking suspected ringers?

- Use explicit thresholds, provide a fast fallback path (retry or assisted capture), and require the Evidence Pack to show which signals fired. Then tune thresholds based on confirmed outcomes, not anecdotes.

- When should you re-verify if someone already passed identity?

- Re-verify at transitions where a different person can take over: assessment start, interview join, and before offer. The purpose is session binding, not repeating paperwork.

Ready to secure your hiring pipeline?

Let IntegrityLens help you verify identity, stop proxy interviews, and standardize screening from first touch to final offer.
