Generative AI in Coding Tests: Policy That Keeps Speed, Cuts Risk

A pragmatic playbook for RevOps teams to keep assessment speed while separating modern AI-enabled productivity from proxy work and identity fraud.

Allow GenAI as a tool, then demand proof-of-work. The operator move is not banning efficiency; it is making authorship auditable.

Friday offer pressure meets a too-perfect submission

A RevOps leader feels this one in the forecast: you need speed, but you cannot afford a public hiring miss. The candidate submits a near-production-quality solution suspiciously fast, then struggles to explain tradeoffs. The team is split between "GenAI is normal" and "this is cheating." By the end of this article, you will be able to draw a bright operational line between acceptable GenAI-enabled efficiency and integrity failures like proxy work, identity fraud, or undisclosed external assistance, without adding blanket friction to every candidate.

Why this question is really about revenue protection

Cost: false positives create funnel leakage and re-sourcing costs.

Risk: false negatives create downstream delivery issues, customer churn risk, and internal credibility loss.

One signal of scale: 31% of hiring managers report they have interviewed a candidate who later turned out to be using a false identity (Checkr, 2025). Directionally, that implies identity risk is not rare and should be instrumented early. It does not prove your company or function has the same rate, and it does not isolate GenAI use from identity fraud.

Stop asking: "Did they use AI?"

Start asking: "Can we trust the work product was created by this verified candidate, under our rules, with reproducible evidence?"

Define "allowed GenAI" with boundaries that create evidence

A modern policy that hires strong operators will usually allow GenAI for coding assessments, with constraints. The goal is not to ban tools. The goal is to prevent undisclosed delegation and preserve signal quality. Use three buckets:

Allowed: using GenAI like an IDE copilot to scaffold, refactor, or recall syntax, with disclosure.

Allowed with proof: using GenAI to draft an approach, but candidate must provide reasoning, tests, and a short walk-through.

Disallowed: a different human (or paid service) producing work, model-to-model prompting by someone else, or using the tool to bypass the intent of the assessment (for example, pasting the entire prompt into a service that returns a complete solution with no understanding).

A short design note: assumptions, tradeoffs, and what they would change with more time.

A minimal test suite that proves correct behavior.

A 5-8 minute recorded or live explanation of 2 key decisions, using their own words.

A disclosure checkbox: "I used GenAI for X" with optional free text.
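The proof-of-work artifacts above can be checked mechanically before a reviewer ever opens the submission. The following is a minimal sketch, not a definitive implementation: the `Submission` class, its field names, and `missing_artifacts` are hypothetical names introduced for illustration, and the check only tests for presence, not quality.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Submission:
    """Hypothetical container for what a candidate hands in."""
    design_note: str = ""
    test_count: int = 0
    walkthrough_minutes: float = 0.0
    # None means the disclosure checkbox was never answered.
    used_genai: Optional[bool] = None
    genai_usage_notes: str = ""  # optional free text, so never required here


def missing_artifacts(sub: Submission) -> List[str]:
    """Return the names of required proof-of-work artifacts that are absent."""
    missing = []
    if not sub.design_note.strip():
        missing.append("design_note")
    if sub.test_count < 1:
        missing.append("test_suite")
    if sub.walkthrough_minutes <= 0:
        missing.append("walkthrough")
    if sub.used_genai is None:
        missing.append("genai_disclosure")
    return missing


complete = Submission(
    design_note="Chose a dict for constant-time lookups; would add caching with more time.",
    test_count=3,
    walkthrough_minutes=6,
    used_genai=True,
    genai_usage_notes="scaffold and test generation",
)
print(missing_artifacts(complete))      # nothing missing
print(missing_artifacts(Submission()))  # every artifact missing
```

An empty result only means the artifacts exist. Whether the design note and walk-through show real understanding is still a reviewer judgment.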

Risk-tiered verification for GenAI-era assessments

Session discontinuity: different device fingerprints between verify step and assessment attempt.

Timing anomalies: extremely low active time paired with high output complexity.

Interaction anomalies: large paste events with no intermediate edits, or sudden jumps in code quality.

Explanation gap: cannot reproduce the approach or adjust code live when asked to change a requirement.

Actioning signals: treat them like credit card fraud ops. Route to a queue, step up verification, and ask for a small live modification task that is hard to outsource in real time.
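The telemetry-based signals above could be derived from raw session data along these lines. This is a sketch under stated assumptions: the `Telemetry` fields are hypothetical, the paste ratio is defined here as pasted characters over all characters entered (the article does not define it), and the 0.7 complexity cutoff is an invented placeholder to calibrate per assessment.

```python
from dataclasses import dataclass


@dataclass
class Telemetry:
    """Hypothetical raw session data captured during an assessment."""
    verify_device_id: str      # fingerprint seen at the identity-verify step
    assessment_device_id: str  # fingerprint seen during the assessment attempt
    pasted_chars: int
    typed_chars: int
    active_typing_seconds: float


def device_fingerprint_continuity(t: Telemetry) -> bool:
    """True when the verify step and the assessment used the same device."""
    return t.verify_device_id == t.assessment_device_id


def paste_event_ratio(t: Telemetry) -> float:
    """Share of entered characters that arrived via paste (assumed definition)."""
    total = t.pasted_chars + t.typed_chars
    return t.pasted_chars / total if total else 0.0


def timing_anomaly(active_seconds: float, complexity_score: float,
                   min_seconds: float = 180, complexity_cutoff: float = 0.7) -> bool:
    """Very low active time paired with high output complexity.

    Both thresholds are illustrative assumptions, not calibrated values.
    """
    return active_seconds < min_seconds and complexity_score >= complexity_cutoff


session = Telemetry("dev-a", "dev-a", pasted_chars=300, typed_chars=700,
                    active_typing_seconds=900.0)
print(device_fingerprint_continuity(session), round(paste_event_ratio(session), 2))
```

The explanation gap is deliberately absent: it comes from a reviewer after a live follow-up, not from telemetry.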

Low risk: accept submission, proceed to interview with normal rubric.

Medium risk: require a 10-minute live walk-through and one small change request.

High risk: re-verify identity before any live interview, then run an AI screening interview focused on reasoning; only invalidate with documented evidence.
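To show how the three tiers might translate into routing logic, here is a rough sketch that borrows the illustrative thresholds from the example policy in this article (0.90, 0.75, 0.35, and 180 seconds). The function and parameter names are hypothetical. One assumption is flagged in the code: a candidate with identity confidence between 0.75 and 0.90 and no other signal is not covered by any tier in the example policy, so this sketch sends that case to the medium step-up rather than letting it pass silently.

```python
TIER_ACTIONS = {
    "low": ["proceed_to_interview", "store_evidence_pack"],
    "medium": ["route_to_step_up_queue", "require_live_walkthrough",
               "store_evidence_pack"],
    "high": ["reverify_identity", "run_ai_screening_interview_reasoning",
             "route_to_security_review", "store_evidence_pack"],
}


def risk_tier(identity_confidence: float, device_continuity: bool,
              paste_ratio: float, active_seconds: float,
              explanation_gap: bool = False) -> str:
    """Classify a submission, checking the most severe tier first."""
    # High: weak identity match, or the reviewer flagged an explanation gap.
    if identity_confidence < 0.75 or explanation_gap:
        return "high"
    # Medium: any single telemetry anomaly triggers a step-up.
    if paste_ratio > 0.35 or active_seconds < 180 or not device_continuity:
        return "medium"
    # Low: strong identity match and clean telemetry.
    if identity_confidence >= 0.90:
        return "low"
    # Assumption: the 0.75-0.90 band is unspecified in the example policy,
    # so default to a step-up instead of an automatic pass.
    return "medium"


tier = risk_tier(identity_confidence=0.95, device_continuity=True,
                 paste_ratio=0.10, active_seconds=600)
print(tier, TIER_ACTIONS[tier])
```

Checking the high tier first means a candidate who trips both a medium and a high condition gets the stronger response.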

A policy you can actually run in ops

The fastest way to operationalize this is to turn it into policy-as-code that drives routing, not vibes. Below is an example YAML policy that classifies GenAI usage and integrity signals into actions and Evidence Pack requirements.

Implementation steps that preserve speed

Sales engineering and RevOps analytics roles often benefit from tool realism. Permit GenAI with disclosure, then evaluate explanation and reproducibility.

For roles requiring deep algorithmic skill, you can tighten allowed usage, but avoid trick questions that mostly test prompt crafting.

2) Redesign the assessment to produce verifiable artifacts

Require tests, a short design note, and a change request that must be applied after submission (forces understanding).

3) Instrument integrity signals and route automatically

Create a review queue only for medium and high risk. Most candidates should never hit manual review.

4) Add a short, structured follow-up

Use a consistent set of questions: "Why this approach?" "What are failure modes?" "Change requirement X, what breaks?" This is where proxy work collapses.

5) Store a defensible Evidence Pack in the ATS

Policy version, identity verification result, telemetry summary, reviewer notes, and final disposition. This is what protects you in audits and internal escalations.
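One way to keep Evidence Packs consistent is to refuse to write an incomplete one. The sketch below assumes the pack is stored as a JSON document. The function name, the field keys, and the added `created_at` timestamp are hypothetical choices, not an ATS API.

```python
import json
from datetime import datetime, timezone

# The five items named above, as hypothetical field keys.
REQUIRED_FIELDS = (
    "policy_version",
    "identity_verification_result",
    "telemetry_summary",
    "reviewer_notes",
    "final_disposition",
)


def build_evidence_pack(**fields) -> str:
    """Return the pack as a JSON string, or raise if a required field is absent."""
    missing = [name for name in REQUIRED_FIELDS if fields.get(name) in (None, "")]
    if missing:
        raise ValueError("Evidence Pack incomplete, missing: " + ", ".join(missing))
    pack = {name: fields[name] for name in REQUIRED_FIELDS}
    # Timestamp is an added convenience for audit trails (assumption).
    pack["created_at"] = datetime.now(timezone.utc).isoformat()
    return json.dumps(pack, sort_keys=True)


record = build_evidence_pack(
    policy_version="2025-12-22",
    identity_verification_result="pass",
    telemetry_summary={"paste_event_ratio": 0.1, "active_typing_seconds": 900},
    reviewer_notes="No step-up required.",
    final_disposition="proceed_to_interview",
)
print(record)
```

Failing loudly on a missing field is a design choice: a pack with gaps is hard to defend in an audit or appeal.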

Queue volume by risk tier (watch for reviewer fatigue).

False positive rate proxies: percent of step-ups that clear cleanly (if too high, your signals are noisy).

Time-to-offer impact: median delay introduced by step-ups.

Appeal rate and overturn rate (quality control for your process).
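The four metrics above can be computed from a flat export of closed cases. This is a minimal sketch assuming each case is a dict with the hypothetical keys shown. Real ATS exports will differ, and the sample rows are made up purely to exercise the function.

```python
from collections import Counter
from statistics import median


def step_up_metrics(cases):
    """Summarize queue load, signal noise, delay, and appeal outcomes.

    Each case is a dict with hypothetical keys: tier, stepped_up, cleared,
    delay_hours, appealed, overturned.
    """
    step_ups = [c for c in cases if c["stepped_up"]]
    appeals = [c for c in cases if c["appealed"]]
    return {
        "volume_by_tier": dict(Counter(c["tier"] for c in cases)),
        # Share of step-ups that cleared cleanly; a high value suggests noisy signals.
        "step_up_clear_rate": (
            sum(c["cleared"] for c in step_ups) / len(step_ups) if step_ups else 0.0
        ),
        "median_step_up_delay_hours": (
            median(c["delay_hours"] for c in step_ups) if step_ups else 0.0
        ),
        "appeal_rate": len(appeals) / len(cases) if cases else 0.0,
        "overturn_rate": (
            sum(c["overturned"] for c in appeals) / len(appeals) if appeals else 0.0
        ),
    }


# Made-up sample rows for illustration only.
sample = [
    {"tier": "low", "stepped_up": False, "cleared": False, "delay_hours": 0,
     "appealed": False, "overturned": False},
    {"tier": "medium", "stepped_up": True, "cleared": True, "delay_hours": 4,
     "appealed": False, "overturned": False},
    {"tier": "medium", "stepped_up": True, "cleared": True, "delay_hours": 8,
     "appealed": False, "overturned": False},
    {"tier": "high", "stepped_up": True, "cleared": False, "delay_hours": 24,
     "appealed": True, "overturned": False},
]
print(step_up_metrics(sample))
```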

Anti-patterns that make fraud worse

- Zero-tolerance bans on GenAI that force candidates to hide normal workflows and push fraud into harder-to-detect channels.

- Unstructured reviewer discretion with no rubric, which creates inconsistent decisions and audit findings.

- Delaying identity verification until late stages, which wastes interviewer time on candidates you cannot confidently bind to an identity.

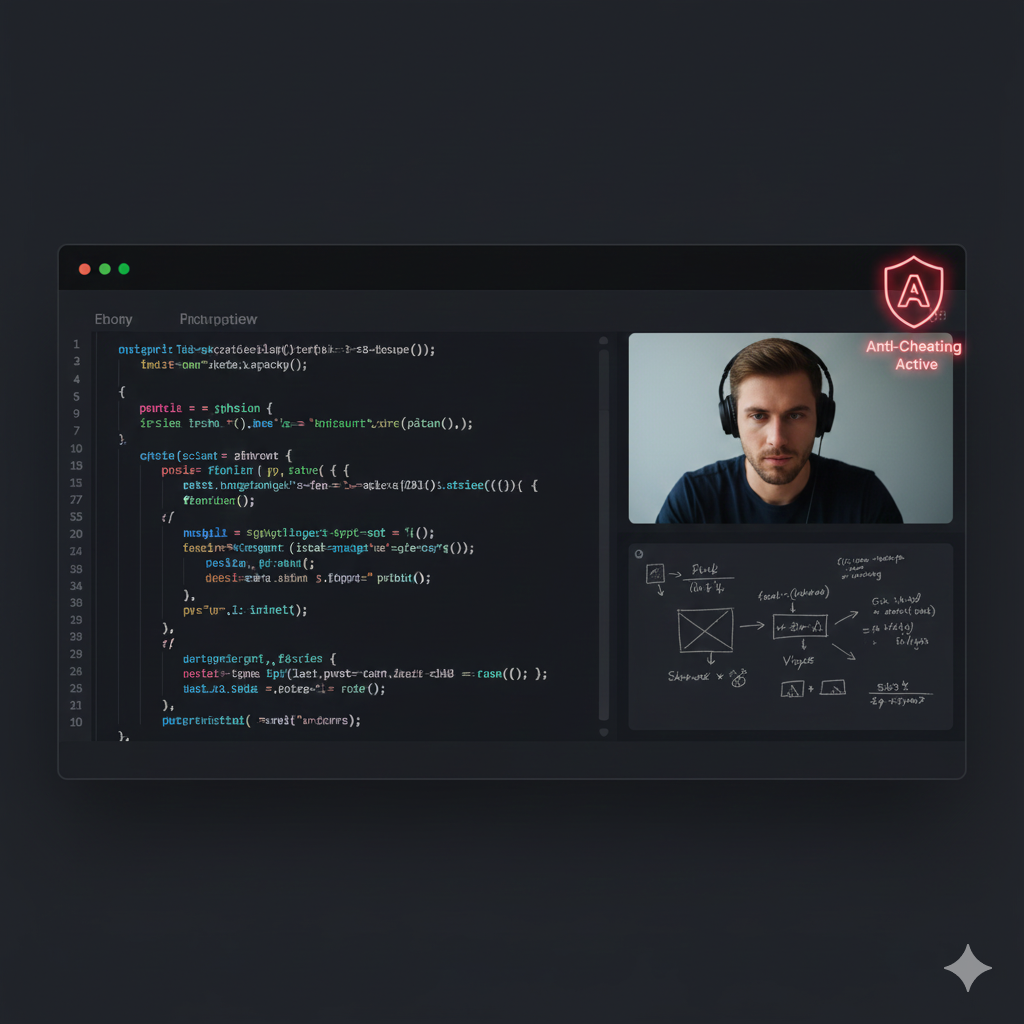

Where IntegrityLens fits

IntegrityLens AI unifies the pipeline so your "GenAI allowed" policy is enforceable without stitching tools together. It combines ATS workflow, biometric identity verification, fraud detection signals, AI screening interviews, and coding assessments in one defensible system used by TA leaders, recruiting ops, and CISOs.

- Verify identity in under three minutes before the interview starts (typical document + voice + face is 2-3 minutes).

- Route candidates by Risk-Tiered Verification, reducing blanket friction and keeping your funnel moving.

- Attach Evidence Packs to the ATS record so every step-up and decision is auditable.

- Run 24/7 AI screening interviews to validate reasoning and authorship quickly.

- Deliver coding assessments across 40+ languages with integrity telemetry and review workflows.

What good looks like in outcomes and governance

If you implement this well, you should see fewer late-stage surprises and less interview waste, without a slowdown in overall time-to-offer. The operational win is a tighter signal-to-noise ratio: most candidates proceed with low friction, while the right small subset gets a fast step-up.

Governance that typically gets Legal and Security to sign off: clear candidate disclosure, minimal retention of biometrics (Zero-Retention Biometrics), role-based access to Evidence Packs, and a documented appeal path for disputed integrity flags.
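Two of those governance controls, role-based access and bounded retention, are simple to express in code. The sketch below reuses the role list and the 180-day retention window from the example policy in this article. The function names are hypothetical, and real enforcement would sit in your ATS or identity provider rather than in a script.

```python
from datetime import date, timedelta

# Values taken from the example policy; tune to your own governance rules.
ROLES_ALLOWED = {"recruiting-ops", "hiring-manager", "security", "legal"}
EVIDENCE_PACK_DAYS = 180


def can_view_evidence_pack(role: str) -> bool:
    """Role-based access: only listed roles may open an Evidence Pack."""
    return role in ROLES_ALLOWED


def is_past_retention(created_on: date, today: date) -> bool:
    """True once the pack is older than the retention window and should be purged."""
    return today > created_on + timedelta(days=EVIDENCE_PACK_DAYS)


print(can_view_evidence_pack("legal"))                            # allowed role
print(can_view_evidence_pack("interviewer"))                      # not in the list
print(is_past_retention(date(2025, 1, 1), date(2025, 7, 1)))      # window elapsed
```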

Key takeaways

- Treat GenAI as a permitted tool in many roles, but require proof-of-work to ensure the candidate did the work.

- Use Risk-Tiered Verification to step up checks only when integrity signals warrant it, avoiding blanket friction.

- Score for reproducible, Day 1 work outputs (tests, tradeoffs, reasoning), not trivia that GenAI makes irrelevant.

- Operationalize integrity signals with clear ownership, automated queues, and an appeal path that Legal can defend.

- Anchor every decision to an Evidence Pack in your ATS so speed does not erase defensibility.

Use this as a starting point for Recruiting Ops. It defines what candidates must disclose, which telemetry triggers a step-up, and what gets stored in the Evidence Pack for audit and appeal.

Tune thresholds per role and calibrate against your false positive rate to avoid reviewer fatigue.

version: "2025-12-22"
policy_name: "genai-coding-assessment-allowed-with-proof"
scope:
  roles:
    - "sales-engineer"
    - "revops-analytics"
    - "software-engineer"
candidate_disclosure:
  required: true
  prompt: "Did you use Generative AI tools (Copilot/ChatGPT/etc.) during this assessment?"
  allowed_values: ["no", "yes"]
  required_when_yes:
    - field: "genai_usage_notes"
      description: "What did you use it for (scaffold, debugging, refactor, tests, docs)?"
allowed_use:
  permitted:
    - "syntax_help"
    - "refactor_suggestions"
    - "test_generation_with_review"
    - "debugging_assistance"
  disallowed:
    - "third_party_human_assistance"
    - "outsourced_completion"
    - "sharing_prompt_or_solution_publicly"
integrity_signals:
  inputs:
    - "identity_verification_confidence"
    - "device_fingerprint_continuity"
    - "paste_event_ratio"
    - "active_typing_seconds"
    - "submission_complexity_score"
    - "explanation_gap_flag"  # set by reviewer after short follow-up
risk_tiers:
  low:
    when:
      all:
        - identity_verification_confidence: ">=0.90"
        - device_fingerprint_continuity: "true"
        - paste_event_ratio: "<=0.35"
    actions:
      - "proceed_to_interview"
      - "store_evidence_pack"
  medium:
    when:
      any:
        - paste_event_ratio: ">0.35"
        - active_typing_seconds: "<180"  # illustrative threshold, calibrate per assessment
        - device_fingerprint_continuity: "false"
    actions:
      - "route_to_step_up_queue"
      - "require_live_walkthrough"
      - "store_evidence_pack"
  high:
    when:
      any:
        - identity_verification_confidence: "<0.75"
        - explanation_gap_flag: "true"
    actions:
      - "reverify_identity"
      - "run_ai_screening_interview_reasoning"
      - "route_to_security_review"
      - "store_evidence_pack"
evidence_pack:
  must_include:
    - "policy_version"
    - "candidate_disclosure"
    - "identity_verification_result"
    - "assessment_telemetry_summary"
    - "submission_artifacts"  # code, tests, design note
    - "step_up_notes_and_outcome"
  retention:
    biometrics: "zero-retention"
    evidence_pack_days: 180
  access_controls:
    roles_allowed: ["recruiting-ops", "hiring-manager", "security", "legal"]
appeal_flow:
  enabled: true
  sla_hours: 48
  decision_requirements:
    - "human_review"
    - "documented_reason"
    - "candidate_notification_template"

Outcome proof: What changes

Before

Coding tests were treated as pass/fail, GenAI use was inconsistently enforced by individual interviewers, and suspicious cases were escalated ad hoc, creating delays and internal disagreement.

After

A written "GenAI allowed with proof" policy, Risk-Tiered Verification, and a step-up queue reduced late-stage surprises while keeping the majority of candidates on the fastest path.

Implementation checklist

- Write an "Allowed AI" policy with explicit boundaries (what is allowed, what must be disclosed, what is disallowed).

- Design prompts that produce verifiable artifacts: tests, logs, design notes, and a short oral walk-through.

- Instrument integrity signals: identity match, device continuity, copy-paste anomalies, and timing outliers.

- Define step-up actions by risk tier: re-auth, live follow-up, or invalidate only with evidence.

- Store an Evidence Pack in the ATS: policy version, timestamps, verification results, and reviewer notes.

- Create an appeal flow to reduce false positives and protect candidate experience.

Questions we hear from teams

- Should we ban GenAI in coding assessments to keep things fair?

- For most modern roles, banning GenAI tends to reduce honesty and increase evasion. A better control is allowing it with disclosure, then requiring proof-of-work artifacts and a short walk-through that confirms understanding.

- What is the cleanest way to detect proxy work without harming candidate experience?

- Do early identity verification, track continuity signals (same verified candidate, same session), and only step up when multiple signals cluster. Then use a short live change request to validate authorship.

- How do we explain this policy to candidates without sounding accusatory?

- Frame it as "tool-realistic" and "fair to everyone": GenAI is allowed, but you must disclose usage and be ready to explain decisions. Publish the policy in the assessment invite so there are no surprises.
