Adaptive Interview Templates Without Answer Leakage

An operator playbook for difficulty-adaptive interview templates that preserve throughput, prevent content leakage, and keep decisions defensible under audit.

Adaptive difficulty is not a question bank trick. It is an access control policy with rubrics, step-up verification, and an immutable event log.

1. HOOK: Real Hiring Problem

It is Thursday 4:40 PM. Your engineering leaders want same-day interviewer availability because offers are slipping past the weekly close. A candidate completes an async screen, scores well, and your team fast-tracks them to a live interview loop. Two weeks later, a hiring manager flags that the candidate cannot explain their own take-home approach. A second reviewer notes the solution mirrors a known answer pattern from a leaked template.

Legal asks the question you do not want in the middle of a quarter-end push: If we are challenged, can we prove who completed each step, who approved the advance, and what evidence the decision was based on?

The operational blast radius is predictable:

- Audit liability: missing chain-of-custody for interview content, identity, and reviewer overrides.

- SLA breach: re-interviews, re-scoring, and escalation queues that cluster right where identity is unverified.

- Cost of mis-hire: replacement cost is often estimated at 50-200% of annual salary depending on role, plus cycle-time and team throughput loss.

- Fraud exposure: remote hiring fraud is not hypothetical. Industry reporting notes 1 in 6 remote applicants showed signs of fraud in one pipeline, and 31% of hiring managers say they have interviewed someone who later turned out to be using a false identity.

Difficulty-adaptive templates are the right response, but only if you implement them as an instrumented control system. Otherwise you get the worst of both worlds: candidates learn the ladder, answers leak, and your reviewers improvise scoring in ways you cannot defend later.

Content control: templates behave like static test banks, not privileged artifacts.

Decision control: difficulty changes are done ad hoc, not by policy.

Evidence control: scores exist, but the underlying proof (who, when, from which device, with what telemetry) is scattered.

2. WHY LEGACY TOOLS FAIL

Legacy stacks treat screening as a set of disconnected tasks instead of a secured workflow. The ATS stores stages, a coding vendor stores scores, and interview notes live in documents or chat. Each system can be "right" locally while the overall process is not defensible.

Why the market failed to solve adaptive difficulty without leakage:

- Sequential checks slow everything down. Identity is checked late, after access to content has already been granted, forcing rework when risk appears.

- No immutable event log across steps. You can see outcomes, but you cannot reconstruct the timeline of gates, overrides, and reviewer actions.

- No unified evidence packs. When Legal asks for proof, you assemble screenshots, exports, and notes by hand. That is an integrity liability.

- No standardized rubric storage. Teams drift into personalized scoring, making adverse action disputes harder to resolve.

- Shadow workflows and data silos. Templates get shared in uncontrolled places, and "equivalent questions" are not actually equivalent, so candidates can memorize paths while you lose comparability.

Time-to-offer becomes hostage to re-review queues.

Template leakage becomes an untracked data breach of hiring content.

Every exception becomes a policy decision made in private, without audit trails.

3. OWNERSHIP & ACCOUNTABILITY MATRIX

Adaptive interviews only work when ownership is explicit and overrides are review-bound.

Assign owners and define what is automated versus manually reviewed:

- Recruiting Ops owns workflow orchestration, SLAs, and ATS stage governance. They are accountable for time-to-event analytics and queue health.

- Security owns identity gating policy, fraud thresholds, access control, and audit policy. They are accountable for evidence retention rules and reviewer permissions.

- Hiring Manager owns rubric discipline and question-to-signal alignment. They are accountable for calibrated scoring and documented rationale.

Sources of truth:

- ATS is the system of record for candidate status, stage timestamps, and approvals.

- Interview platform is the system of record for recordings, transcripts, and rubric entries.

- Verification service is the system of record for identity events, liveness, document authentication, and device and behavioral signals.

In a defensible model, these are not separate narratives. They are stitched into one ATS-anchored audit trail with a tamper-resistant evidence pack per candidate.

Automate: tier assignment, question selection within tier, step-up triggers, evidence capture, and write-backs to ATS.

Manual review: identity exceptions, proxy interview flags, rubric disputes, and any override that advances a candidate despite a failed gate.

4. MODERN OPERATING MODEL

Treat interview templates like secure access management. Candidates do not get access to high-signal content by default. They earn it through identity gates and evidence-based scoring.

The operational goal is not to trap candidates. It is to keep throughput high while ensuring that any decision can be reconstructed: who did what, when, under what policy, and with what evidence.

An instrumented workflow for adaptive difficulty has five properties:

Identity verification before access: verify identity in under three minutes before the interview starts, and require step-up verification when risk signals spike.

Event-based triggers: promotion to harder tiers is triggered by logged outcomes (score thresholds, telemetry integrity, time-on-task patterns), not reviewer gut feel.

Automated evidence capture: every answer, code run, playback, rubric score, and override is captured as an evidence artifact tied to timestamps.

Analytics dashboards: track time-to-event (invite-to-start, start-to-submit, submit-to-review, review-to-advance) segmented by risk tier.

Standardized rubrics: difficulty changes do not change evaluation standards. Rubrics specify the evidence expected at each tier, so reviewers stay consistent.
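The event log and evidence capture properties above can be approximated with a hash chain, where each entry commits to the one before it so that a later edit becomes detectable. The following is a minimal in-memory sketch, not IntegrityLens's implementation: `EventLog`, its field names, and the all-zero genesis hash are assumptions made for illustration. A hash chain provides tamper evidence rather than true immutability, so a real deployment would likely pair it with write-once storage.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # assumed placeholder for "no previous entry"


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's canonical JSON."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class EventLog:
    """Append-only log in which each entry commits to the one before it."""
    entries: list = field(default_factory=list)

    def append(self, event: str, actor: str, timestamp: str, **details) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        payload = {"event": event, "actor": actor,
                   "timestamp": timestamp, "details": details}
        entry = {**payload, "prev_hash": prev_hash,
                 "hash": _digest(prev_hash, payload)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            payload = {k: entry[k] for k in ("event", "actor", "timestamp", "details")}
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, payload):
                return False
            prev_hash = entry["hash"]
        return True
```

In use, each gate, score, and override would call `append`, and an auditor would call `verify` before trusting the timeline.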

Use tiered question pools with controlled equivalence. Multiple prompts map to the same rubric dimensions and expected evidence.

Gate tier promotion on both performance and integrity signals. A high score with low-integrity telemetry does not auto-promote.

Expire access by default. Content links and sessions time out and are bound to verified identity and device context.
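The rule that a high score with low-integrity telemetry does not auto-promote can be expressed as a small decision function. This is a sketch under stated assumptions: the `low < medium < high` ordering, the outcome names, and the reason codes are hypothetical, and the thresholds in the usage note are illustrative.

```python
from dataclasses import dataclass

# Assumed ordering of integrity levels, lowest to highest.
INTEGRITY_RANK = {"low": 0, "medium": 1, "high": 2}


@dataclass(frozen=True)
class PromotionRule:
    min_score: int
    integrity_required: str


@dataclass(frozen=True)
class Decision:
    outcome: str       # "promote", "manual_review", or "hold"
    reason_code: str   # logged alongside the decision


def decide_promotion(score: int, integrity: str, rule: PromotionRule) -> Decision:
    """Promote only when both the score and the integrity level meet the rule."""
    meets_score = score >= rule.min_score
    meets_integrity = (
        INTEGRITY_RANK[integrity] >= INTEGRITY_RANK[rule.integrity_required]
    )
    if meets_score and meets_integrity:
        return Decision("promote", "score_and_integrity_met")
    if meets_score:
        # Strong score but weak integrity: route to a human, never auto-promote.
        return Decision("manual_review", "integrity_below_required")
    return Decision("hold", "score_below_threshold")
```

For example, with `PromotionRule(min_score=70, integrity_required="medium")`, a score of 95 with `"low"` integrity yields `manual_review` rather than `promote`.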

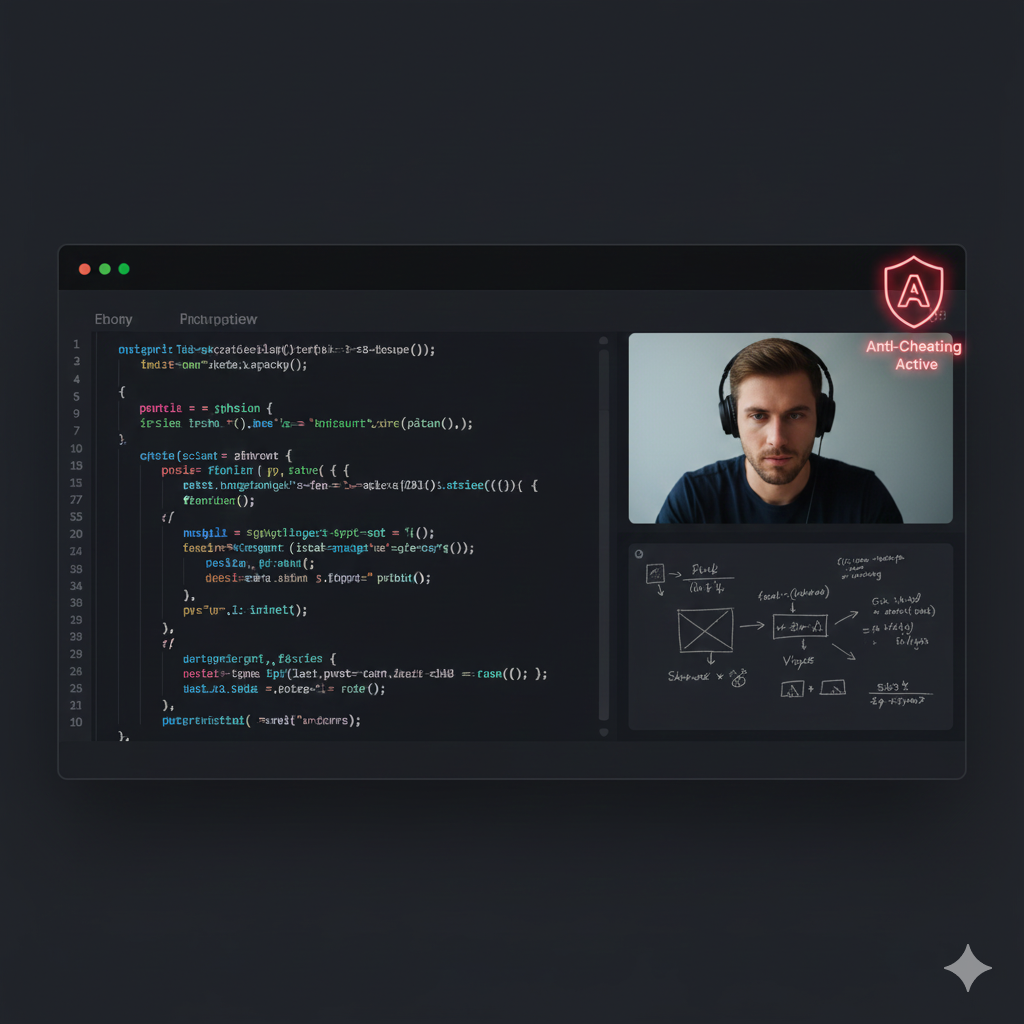

5. WHERE INTEGRITYLENS FITS

IntegrityLens AI supports this operating model as a single hiring pipeline with identity gating, assessment execution evidence, and ATS-anchored audit trails:

- AI coding assessments across 40+ languages with plagiarism detection and execution telemetry, so promotion decisions are evidence-based, not vibes-based.

- Multi-layered fraud prevention with deepfake detection, proxy interview detection, behavioral signals, and device fingerprinting to support step-up verification when risk changes.

- Immutable evidence packs that bundle identity events, timestamps, recordings, code playback, and reviewer notes into one audit-ready record per candidate.

- AI screening interviews available 24/7, enabling async-first throughput without losing rubric standardization.

- 256-bit AES encryption baseline and SOC 2 Type II and ISO 27001-certified infrastructure foundations for compliance-sensitive rollouts.

Parallelized checks instead of waterfall workflows.

Review-bound SLAs for exceptions and escalations.

Single source of truth for disputes, audits, and post-mortems.

6. ANTI-PATTERNS THAT MAKE FRAUD WORSE

Exactly three failure modes consistently increase leakage and proxy risk:

- Using a single visible ladder of difficulty where candidates can predict the next prompt. Predictability is a content leak multiplier.

- Allowing manual tier overrides without a logged rationale and second-approver requirement. Manual review without evidence creates audit liabilities.

- Reusing "equivalent questions" that are not rubric-equivalent. That breaks comparability and invites legal exposure when outcomes diverge across candidates.

They create unlogged exceptions and inconsistent scoring.

They incentivize answer sharing because prompts become reusable assets.

They force re-interviews, which burn time-to-offer and recruiter capacity.
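The second anti-pattern, unlogged manual overrides, can be blocked by validating every override before it is applied. The sketch below reuses the required fields and approver roles from the policy later in this post; the `requested_by` and `second_approver` keys and the problem strings are assumptions made for illustration.

```python
# Roles and required fields mirror the policy's manual_override_controls section.
ALLOWED_APPROVER_ROLES = {"RecruitingOpsLead", "SecurityReviewer"}
REQUIRED_FIELDS = ("override_reason_code", "evidence_reference_ids", "timestamp")


def validate_override(override: dict) -> list[str]:
    """Return a list of problems; an empty list means the override may proceed."""
    # Empty values count as missing, so an empty evidence list is rejected.
    problems = [f"missing:{name}" for name in REQUIRED_FIELDS
                if not override.get(name)]

    requester = override.get("requested_by")        # hypothetical key
    approver = override.get("second_approver")      # hypothetical key
    if not approver:
        problems.append("missing:second_approver")
    elif approver.get("id") == requester:
        # A second approver must be a different person from the requester.
        problems.append("second_approver_is_requester")
    elif approver.get("role") not in ALLOWED_APPROVER_ROLES:
        problems.append("approver_role_not_allowed")
    return problems
```

Returning the full list of problems, rather than failing on the first one, lets the workflow log exactly why an override was rejected.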

7. IMPLEMENTATION RUNBOOK

Define tier rubrics and equivalence sets (SLA: 5 business days)

- Owner: Hiring Manager (content) + Recruiting Ops (rubric format)

- Logged: rubric version, approvers, effective date, mapping of each prompt to rubric dimensions

Configure identity gate before any Tier 2 or Tier 3 access (SLA: automated, immediate)

- Owner: Security

- Logged: document auth result, liveness result, face match, timestamp, device fingerprint, pass-fail reason codes

Launch Tier 1 async screen with controlled session access (SLA: candidate completes within 72 hours; auto-reminder at 24 and 48)

- Owner: Recruiting Ops

- Logged: invite timestamp, start timestamp, submission timestamp, access expiration events

Auto-score plus integrity scoring (SLA: under 2 hours to enter review queue)

- Owner: System automation; Recruiting Ops monitors queue SLA

- Logged: score breakdown, plagiarism signals, execution telemetry, code playback availability, integrity flags

Policy-based promotion to Tier 2 (SLA: same business day review)

- Owner: Hiring Manager for rubric review; Security only if integrity flag present

- Logged: promotion decision, rubric notes, reviewer identity, time-in-queue

Step-up verification when risk increases (SLA: 4 business hours for manual review when triggered)

- Trigger examples: proxy suspicion, deepfake signal, device change, abnormal telemetry

- Logged: trigger event, reviewer disposition, supporting evidence artifacts, any access revocation

Tier 2 or Tier 3 prompt selection by rule, not by human choice (SLA: automated, immediate)

- Owner: Recruiting Ops (workflow policy)

- Logged: question set ID, randomization seed or selection rule, session binding to verified identity

Final decision package and write-back (SLA: 24 hours after loop completion)

- Logged: evidence pack generated timestamp, final rubric, approvers, offer decision, any overrides with reason codes

If Legal asked you to prove who approved this candidate, you should be able to retrieve a single evidence pack with timestamps and tamper-resistant feedback. If it is not logged, it is not defensible.
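The runbook step that selects Tier 2 or Tier 3 prompts by rule, and logs a question set ID and randomization seed, could be sketched as a deterministic draw from a rubric-equivalent pool. This is one possible approach, not the documented IntegrityLens behavior: the function name, the HMAC-derived seed, and the sample prompt IDs are assumptions. The server-side secret keeps candidates from predicting their prompt even if the selection rule leaks.

```python
import hashlib
import hmac
import random


def select_prompt(candidate_id: str, question_set_id: str,
                  pool: list[str], secret: bytes) -> dict:
    """Pick one prompt from an equivalence pool, reproducibly and without human choice."""
    # Derive the seed from a server-side secret plus the candidate and question set,
    # so the same inputs always reproduce the same choice during an audit.
    message = f"{question_set_id}:{candidate_id}".encode("utf-8")
    seed = hmac.new(secret, message, hashlib.sha256).hexdigest()

    # Sort the pool first so the result does not depend on storage order.
    chosen = random.Random(seed).choice(sorted(pool))

    # The returned record is what would be written to the event log.
    return {"question_set_id": question_set_id, "seed": seed, "prompt_id": chosen}


record = select_prompt(
    "cand-1", "t2-backend-v3",
    ["p-rate-limiter", "p-lru-cache", "p-retry-queue"],  # hypothetical prompt IDs
    secret=b"replace-with-managed-secret",
)
```

Because the seed is logged, a reviewer can later re-run the selection and confirm that the prompt the candidate received was the one the rule produced.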

Invite-to-start percentile by tier and by region.

Review queue aging segmented by integrity flags.

Override rate and second-approver compliance rate.

Re-interview rate caused by content leakage suspicion.
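The first of these metrics, an invite-to-start percentile by tier, can be computed directly from logged timestamps. The sketch below assumes each record is a dict with `tier`, `invite_sent`, and `session_started` keys holding ISO 8601 strings with explicit offsets; those field names and the nearest-rank percentile method are choices made for this example.

```python
import math
from datetime import datetime


def minutes_between(start_iso: str, end_iso: str) -> float:
    """Elapsed minutes between two ISO 8601 timestamps."""
    start = datetime.fromisoformat(start_iso)
    end = datetime.fromisoformat(end_iso)
    return (end - start).total_seconds() / 60


def nearest_rank_percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of data at or below it."""
    if not values:
        raise ValueError("no values to summarize")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def invite_to_start_by_tier(records: list[dict], pct: float = 90) -> dict:
    """Group invite-to-start durations by tier and report one percentile per tier."""
    by_tier: dict[str, list[float]] = {}
    for rec in records:
        duration = minutes_between(rec["invite_sent"], rec["session_started"])
        by_tier.setdefault(rec["tier"], []).append(duration)
    return {tier: nearest_rank_percentile(vals, pct) for tier, vals in by_tier.items()}
```

The same grouping pattern extends to the other intervals (start-to-submit, submit-to-review, review-to-advance) and to segmentation by region or integrity flag.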

Key takeaways

- Treat interview templates as controlled access to privileged content. Identity gate before access and log every step.

- Adaptive difficulty must be rule-driven and rubric-anchored. If it is not logged, it is not defensible.

- Use parallelized checks (identity, device, telemetry) to avoid waterfall slowdowns while still escalating risk with step-up verification.

- Design for dispute resolution: code playback, execution telemetry, and tamper-resistant feedback reduce re-review time and legal exposure.

Use this policy as the shared contract between Recruiting Ops, Security, and Hiring Managers.

It defines tier promotion rules, step-up verification triggers, and what must be logged for audit readiness.

    version: "1.0"
    policy_id: "adaptive-interview-templates"
    source_of_truth:
      ats: "IntegrityLens ATS"
      verification: "IntegrityLens Verification"
      assessments: "IntegrityLens Assessments"
    tiers:
      tier_1:
        purpose: "baseline competence"
        session_controls:
          access_expires_minutes: 90
          identity_required: false
        promotion_rule:
          min_score: 70
          integrity_required: "medium"
      tier_2:
        purpose: "role-relevant depth"
        session_controls:
          access_expires_minutes: 120
          identity_required: true
          bind_to_device_fingerprint: true
        promotion_rule:
          min_score: 80
          integrity_required: "high"
      tier_3:
        purpose: "edge cases and production constraints"
        session_controls:
          access_expires_minutes: 150
          identity_required: true
          step_up_verification_required: true
    step_up_verification_triggers:
      - trigger: "device_fingerprint_changed"
        action: "require_liveness_recheck"
      - trigger: "proxy_interview_signal"
        action: "pause_access_and_route_to_security_review"
      - trigger: "deepfake_signal"
        action: "pause_access_and_route_to_security_review"
    manual_override_controls:
      require_second_approver: true
      approver_roles_allowed: ["RecruitingOpsLead", "SecurityReviewer"]
      required_fields:
        - "override_reason_code"
        - "evidence_reference_ids"
        - "timestamp"
    logging_requirements:
      immutable_event_log: true
      events:
        - "invite_sent"
        - "session_started"
        - "identity_check_started"
        - "identity_check_passed"
        - "identity_check_failed"
        - "assessment_submitted"
        - "integrity_flag_raised"
        - "tier_promoted"
        - "manual_override_applied"
        - "evidence_pack_generated"
    evidence_pack_contents:
      include:
        - "rubric_version"
        - "question_set_id"
        - "score_breakdown"
        - "execution_telemetry"
        - "code_playback"
        - "reviewer_notes"
        - "identity_events"
    retention:
      biometrics: "zero-retention"
      logs_and_artifacts_days: 365
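To show how the session controls and step-up triggers in this policy might be enforced at request time, here is a minimal sketch. The trigger-to-action mapping is copied from the policy; the `check_session` function, the session dict shape, the `"allow"` and `"access_expired"` return values, and the rule that a pause outranks a liveness recheck are all assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone

# Copied from step_up_verification_triggers in the policy above.
STEP_UP_ACTIONS = {
    "device_fingerprint_changed": "require_liveness_recheck",
    "proxy_interview_signal": "pause_access_and_route_to_security_review",
    "deepfake_signal": "pause_access_and_route_to_security_review",
}
PAUSE = "pause_access_and_route_to_security_review"


def check_session(session: dict, now: datetime,
                  device_fingerprint: str, signals: list[str]) -> str:
    """Return the action to take for one access attempt on a tiered session."""
    expires_at = session["started_at"] + timedelta(
        minutes=session["access_expires_minutes"])
    if now >= expires_at:
        return "access_expired"  # access expires by default

    triggers = list(signals)
    if (session.get("bind_to_device_fingerprint")
            and device_fingerprint != session["device_fingerprint"]):
        triggers.append("device_fingerprint_changed")

    actions = [STEP_UP_ACTIONS[t] for t in triggers if t in STEP_UP_ACTIONS]
    if PAUSE in actions:
        return PAUSE  # assumed priority: pausing outranks a liveness recheck
    return actions[0] if actions else "allow"


start = datetime(2024, 1, 1, 10, 0, tzinfo=timezone.utc)
tier_2_session = {
    "started_at": start,
    "access_expires_minutes": 120,       # tier_2 value from the policy
    "bind_to_device_fingerprint": True,
    "device_fingerprint": "fp-a",        # hypothetical fingerprint value
}
```

Whatever action is returned would also be written to the event log, so that an access revocation can be traced back to the trigger that caused it.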

Outcome proof: What changes

Before

Static templates were reused across teams. Difficulty adjustments were done ad hoc in interviewer notes. Identity checks were inconsistent and often occurred after candidates had already accessed higher-signal questions. Disputes required manual evidence collection across systems.

After

Implemented tiered templates with rubric-equivalent pools, identity gating before Tier 2 access, and step-up verification on integrity triggers. All promotions and overrides were captured in ATS-anchored evidence packs with timestamps and reviewer accountability.

Implementation checklist

- Define difficulty tiers and promotion rules (what triggers step-up).

- Map each question to a rubric and evidence artifact (recording, code playback, telemetry).

- Set review-bound SLAs for exceptions and risk escalations.

- Instrument an immutable event log for every gate, score, and override.

- Rotate content with controlled equivalence, not random novelty.

Questions we hear from teams

- How do we adapt difficulty without creating bias across candidates?

  Lock the evaluation to rubric dimensions, not to specific prompts. Maintain rubric-equivalent pools per tier, version them, and log which pool and rubric version each candidate received. Any override requires a reason code and second approver.

- What is the minimum viable setup to reduce answer leakage?

  Start with two tiers. Gate Tier 2 behind identity verification, rotate question pools weekly, and require that every promotion is logged with a rubric score plus integrity signals (telemetry and plagiarism checks).

- What do we show Legal during an audit or dispute?

  A single evidence pack: identity events with timestamps, the rubric and its version, the question set ID, recordings or transcripts, code playback and execution telemetry, reviewer notes, and any override approvals. If it is not logged, it is not defensible.
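As a rough illustration of what a single evidence pack could look like in code, the sketch below checks that every item from the policy's `evidence_pack_contents` list is present and then seals the pack with a SHA-256 digest. The function name, the use of artifact references as values, and the digest field are assumptions; the digest makes later edits detectable but does not by itself prevent them.

```python
import hashlib
import json

# Mirrors evidence_pack_contents.include in the policy.
REQUIRED_CONTENTS = (
    "rubric_version", "question_set_id", "score_breakdown",
    "execution_telemetry", "code_playback", "reviewer_notes", "identity_events",
)


def build_evidence_pack(candidate_id: str, artifacts: dict) -> dict:
    """Assemble one pack per candidate and seal it with a content digest."""
    missing = [name for name in REQUIRED_CONTENTS if name not in artifacts]
    if missing:
        # Refuse to produce a pack that would not survive an audit.
        raise ValueError(f"evidence pack incomplete: {missing}")

    contents = {name: artifacts[name] for name in REQUIRED_CONTENTS}
    # Canonical JSON (sorted keys, fixed separators) keeps the digest stable.
    canonical = json.dumps(
        {"candidate_id": candidate_id, "contents": contents},
        sort_keys=True, separators=(",", ":"),
    )
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return {"candidate_id": candidate_id, "contents": contents, "sha256": digest}
```

Recomputing the digest at dispute time and comparing it with the stored value gives Legal a quick check that the pack has not changed since it was generated.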
